ModelBench - Alternatives & Competitors

No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

Ranked by Relevance

-

1

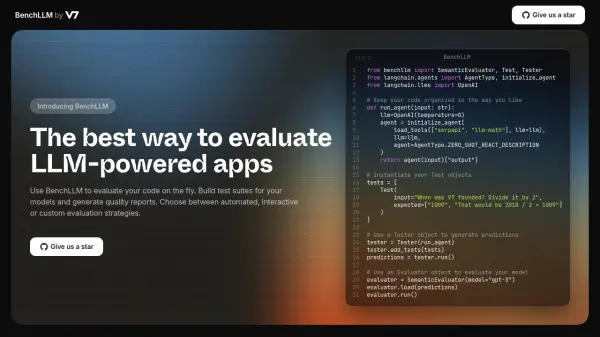

BenchLLM The best way to evaluate LLM-powered apps

BenchLLM The best way to evaluate LLM-powered appsBenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

2. PromptsLabs: A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

3. Compare AI Models: AI Model Comparison Tool

Compare AI Models is a platform providing comprehensive comparisons and insights into various large language models, including GPT-4o, Claude, Llama, and Mistral.

- Freemium

4. LLM Explorer: Discover and Compare Open-Source Language Models

LLM Explorer is a comprehensive platform for discovering, comparing, and accessing over 46,000 open-source Large Language Models (LLMs) and Small Language Models (SLMs).

- Free

5. OneLLM: Fine-tune, evaluate, and deploy your next LLM without code.

OneLLM is a no-code platform enabling users to fine-tune, evaluate, and deploy Large Language Models (LLMs) efficiently. Streamline LLM development by creating datasets, integrating API keys, running fine-tuning processes, and comparing model performance.

- Freemium

- From $19

6. Braintrust: The end-to-end platform for building world-class AI apps.

Braintrust provides an end-to-end platform for developing, evaluating, and monitoring Large Language Model (LLM) applications. It helps teams build robust AI products through iterative workflows and real-time analysis.

- Freemium

- From $249

7. Prompt Hippo: Test and Optimize LLM Prompts with Science.

Prompt Hippo is an AI-powered testing suite for Large Language Model (LLM) prompts, designed to improve their robustness, reliability, and safety through side-by-side comparisons.

- Freemium

- From $100

8. Adaline: Ship reliable AI faster

Adaline is a collaborative platform for teams building with Large Language Models (LLMs), enabling efficient iteration, evaluation, deployment, and monitoring of prompts.

- Contact for Pricing

9. Humanloop: The LLM evals platform for enterprises to ship and scale AI with confidence

Humanloop is an enterprise-grade platform that provides tools for LLM evaluation, prompt management, and AI observability, enabling teams to develop, evaluate, and deploy trustworthy AI applications.

- Freemium

10. Prompt Octopus: LLM evaluations directly in your codebase

Prompt Octopus is a VSCode extension allowing developers to select prompts, choose from 40+ LLMs, and compare responses side-by-side within their codebase.

- Freemium

- From $10

11. Promptech: The AI teamspace to streamline your workflows

Promptech is a collaborative AI platform that provides prompt engineering tools and teamspace solutions for organizations to effectively utilize Large Language Models (LLMs). It offers access to multiple AI models, workspace management, and enterprise-ready features.

- Paid

- From $20

12. Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test and automate evaluations of generative AI products and agents. It facilitates collaborative development and ensures high-quality LLM applications.

- Usage Based

13. SysPrompt: The collaborative prompt CMS for LLM engineers

SysPrompt is a collaborative Content Management System (CMS) designed for LLM engineers to manage, version, and collaborate on prompts, facilitating faster development of better LLM applications.

- Paid

14. OpenRouter: A unified interface for LLMs

OpenRouter provides a unified interface for accessing and comparing various Large Language Models (LLMs), offering users the ability to find optimal models and pricing for their specific prompts.

- Usage Based

15. Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

16. Langtail: The low-code platform for testing AI apps

Langtail is a comprehensive testing platform that enables teams to test and debug LLM-powered applications with a spreadsheet-like interface, offering security features and integration with major LLM providers.

- Freemium

- From $99

17. MIOSN: Stop overthinking LLMs. Find the optimal model at the lowest cost.

MIOSN helps users find the most suitable and cost-effective Large Language Model (LLM) for their specific tasks by analyzing and comparing different models.

- Free

18. Promptotype: The platform for structured prompt engineering

Promptotype is a platform designed for structured prompt engineering, enabling users to develop, test, and monitor LLM tasks efficiently.

- Freemium

- From $6

19. Hegel AI: Developer Platform for Large Language Model (LLM) Applications

Hegel AI provides a developer platform for building, monitoring, and improving large language model (LLM) applications, featuring tools for experimentation, evaluation, and feedback integration.

- Contact for Pricing

20. Intura: Compare, Choose, and Save on AI & LLMs

Intura helps businesses experiment with, compare, and deploy AI and LLM models side-by-side to optimize performance and cost before full-scale implementation.

- Freemium

21. neutrino AI: Multi-model AI Infrastructure for Optimal LLM Performance

Neutrino AI provides multi-model AI infrastructure to optimize Large Language Model (LLM) performance for applications. It offers tools for evaluation, intelligent routing, and observability to enhance quality, manage costs, and ensure scalability.

- Usage Based

22. OpenLIT: Open Source Platform for AI Engineering

OpenLIT is an open-source observability platform designed to streamline AI development workflows, particularly for Generative AI and LLMs, offering features like prompt management, performance tracking, and secure secrets management.

- Other

-

23

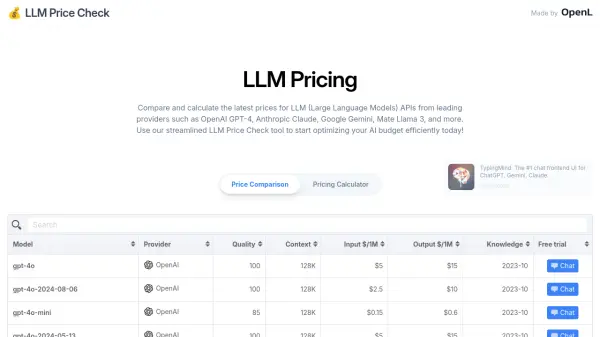

LLM Price Check Compare LLM Prices Instantly

LLM Price Check Compare LLM Prices InstantlyLLM Price Check allows users to compare and calculate prices for Large Language Model (LLM) APIs from providers like OpenAI, Anthropic, Google, and more. Optimize your AI budget efficiently.

- Free

24. Promptmetheus: Forge better LLM prompts for your AI applications and workflows

Promptmetheus is a comprehensive prompt engineering IDE that helps developers and teams create, test, and optimize language model prompts with support for 100+ LLMs and popular inference APIs.

- Freemium

- From $29

25. Weavel: Automate Prompt Engineering 50x Faster

Weavel optimizes prompts for LLM applications, achieving significantly higher performance than manual methods. Streamline your workflow and enhance your AI's accuracy with just a few lines of code.

- Freemium

- From $250

26. klu.ai: Next-gen LLM App Platform for Confident AI Development

Klu is an all-in-one LLM App Platform that enables teams to experiment, version, and fine-tune GPT-4 Apps with collaborative prompt engineering and comprehensive evaluation tools.

- Freemium

- From $30

27. Narrow AI: Take the Engineer out of Prompt Engineering

Narrow AI autonomously writes, monitors, and optimizes prompts for any large language model, enabling faster AI feature deployment and reduced costs.

- Contact for Pricing

28. LMSYS Org: Developing open, accessible, and scalable large model systems

LMSYS Org is a leading organization dedicated to developing and evaluating large language models and systems, offering open-source tools and frameworks for AI research and implementation.

- Free

29. LangWatch: Monitor, Evaluate & Optimize your LLM performance with 1-click

LangWatch empowers AI teams to ship 10x faster with quality assurance at every step. It provides tools to measure, maximize, and easily collaborate on LLM performance.

- Paid

- From $59

30. promptfoo: Test & secure your LLM apps with open-source LLM testing

promptfoo is an open-source LLM testing tool designed to help developers secure and evaluate their language model applications, offering features like vulnerability scanning and continuous monitoring.

- Freemium

31. llmChef: Perfect AI responses with zero effort

llmChef is an AI enrichment engine that provides access to over 100 pre-made prompts (recipes) and leading LLMs, enabling users to get optimal AI responses without crafting perfect prompts.

- Paid

- From $5

32. Parea: Test and Evaluate your AI systems

Parea is a platform for testing, evaluating, and monitoring Large Language Model (LLM) applications, helping teams track experiments, collect human feedback, and deploy prompts confidently.

- Freemium

- From $150

33. Keywords AI: LLM monitoring for AI startups

Keywords AI is a comprehensive developer platform for LLM applications, offering monitoring, debugging, and deployment tools. It serves as a Datadog-like solution specifically designed for LLM applications.

- Freemium

- From $7

34. EvalsOne: Evaluate LLMs & RAG Pipelines Quickly

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium

- From $19

35. Easybeam: No-code AI agents for apps

Easybeam is a no-code platform enabling product teams and founders to build, deploy, and manage personalized, app-ready AI agents quickly. Enhance user engagement and streamline workflows with intuitive AI feature creation.

- Free Trial

- From $50

36. LLM Pricing: A comprehensive pricing comparison tool for Large Language Models

LLM Pricing is a website that aggregates and compares pricing information for various Large Language Models (LLMs) from official AI providers and cloud service vendors.

- Free

37. Literal AI: Ship reliable LLM Products

Literal AI streamlines the development of LLM applications, offering tools for evaluation, prompt management, logging, monitoring, and more to build production-grade AI products.

- Freemium

38. Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

39. Langfuse: Open Source LLM Engineering Platform

Langfuse provides an open-source platform for tracing, evaluating, and managing prompts to debug and improve LLM applications.

- Freemium

- From $59

40. Agenta: End-to-End LLM Engineering Platform

Agenta is an LLM engineering platform offering tools for prompt engineering, versioning, evaluation, and observability in a single, collaborative environment.

- Freemium

- From $49

41. Reprompt: Collaborative prompt testing for confident AI deployment

Reprompt is a developer-focused platform that enables efficient testing and optimization of AI prompts with real-time analysis and comparison capabilities.

- Usage Based

42. Requesty: Develop, Deploy, and Monitor AI with Confidence

Requesty is a platform for faster AI development, deployment, and monitoring. It provides tools for refining LLM applications, analyzing conversational data, and extracting actionable insights.

- Usage Based

43. PromptMage: A Python framework for simplified LLM-based application development

PromptMage is a Python framework that streamlines the development of complex, multi-step applications powered by Large Language Models (LLMs), offering version control, testing capabilities, and automated API generation.

- Other

44. LangDB: The Fastest Enterprise AI Gateway for Secure, Governed, and Optimized AI Traffic.

LangDB is an enterprise AI gateway designed to secure, govern, and optimize AI traffic across over 250 LLMs via a unified API. It helps reduce costs and enhance performance for AI workflows.

- Freemium

- From $49

45. Model Context Chat: Seamlessly connect LLM providers for secure, real-time AI chat interactions.

Model Context Chat enables users to connect multiple large language model providers via a user-friendly chat interface, offering fast, secure, and scalable AI conversations.

- Other

46. Scorecard.io: Testing for production-ready LLM applications, RAG systems, Agents, Chatbots.

Scorecard.io is an evaluation platform designed for testing and validating production-ready Generative AI applications, including LLMs, RAG systems, agents, and chatbots. It supports the entire AI production lifecycle from experiment design to continuous evaluation.

- Contact for Pricing

47. Ottic: QA for LLM products done right

Ottic empowers tech and non-technical teams to test LLM applications, ensuring faster product development and enhanced reliability. Streamline your QA process and gain full visibility into your LLM application's behavior.

- Contact for Pricing

48. FinetuneDB: AI Fine-tuning Platform to Create Custom LLMs

FinetuneDB is an AI fine-tuning platform that allows teams to build, train, and deploy custom language models using their own data, improving performance and reducing costs.

- Freemium

49. LLM Optimize: Rank Higher in AI Engines Recommendations

LLM Optimize provides professional website audits to help you rank higher in LLMs like ChatGPT and Google's AI Overview, outranking competitors with tailored, actionable recommendations.

- Paid

-

50

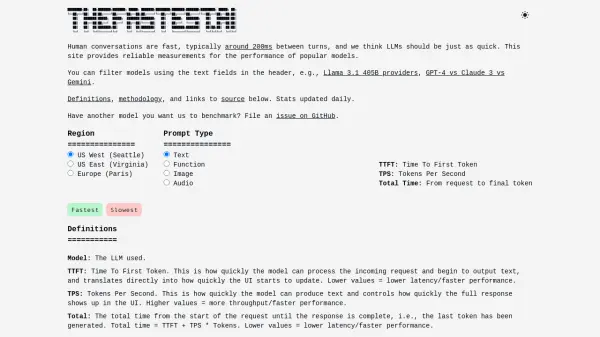

TheFastest.ai Reliable performance measurements for popular LLM models.

TheFastest.ai Reliable performance measurements for popular LLM models.TheFastest.ai provides reliable, daily updated performance benchmarks for popular Large Language Models (LLMs), measuring Time To First Token (TTFT) and Tokens Per Second (TPS) across different regions and prompt types.

- Free
