What is LangDB?

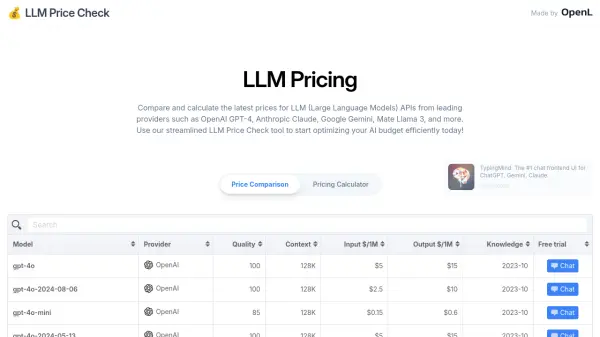

LangDB provides an enterprise-grade AI gateway that lets businesses manage and optimize their interactions with Large Language Models (LLMs). Built entirely in Rust, the platform prioritizes performance and scalability for handling significant AI traffic. It offers a unified, OpenAI-compatible API to access and govern more than 250 LLMs, which simplifies integration and model management within existing frameworks and does not require installing additional packages through pip or npm.
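To illustrate what "OpenAI-compatible" means in practice, the following minimal sketch builds a chat completion request in the OpenAI request format using only the Python standard library. The base URL, API key, and model name are placeholders chosen for illustration, not values taken from LangDB's documentation; the request is constructed but not sent.

```python
import json
import urllib.request

# Placeholder values (assumptions): substitute the endpoint and key
# issued by your own gateway account.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"
API_KEY = "YOUR_API_KEY"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The model name is illustrative; a gateway would map it to a provider.
request = build_chat_request("gpt-4o-mini", "Summarize this ticket in one sentence.")

# To actually send it: urllib.request.urlopen(request)
```

Because the payload follows the OpenAI request shape, switching to a different model behind such a gateway would, under these assumptions, only require changing the `model` string.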

The platform focuses heavily on cost control and operational efficiency. Features like smart routing automatically direct prompts to the most cost-effective model while maintaining performance, potentially reducing LLM expenses by up to 70%. LangDB includes advanced observability tools for monitoring complex AI agent workflows, granular cost management capabilities tracking expenses by model, project, or user, and comprehensive analytics for insights into LLM performance and usage patterns. An interactive playground facilitates prompt experimentation and comparison across models.
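The idea behind cost-aware routing can be sketched as "pick the cheapest model that still meets a quality bar". The example below is a simplified illustration of that idea, not LangDB's actual routing algorithm, which the description above does not specify. The model names, prices, and quality scores are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # USD, made-up figures
    quality_score: float       # 0..1, made-up score


def route(options: list[ModelOption], min_quality: float) -> ModelOption:
    """Return the cheapest model whose quality meets the threshold."""
    eligible = [m for m in options if m.quality_score >= min_quality]
    if not eligible:
        raise ValueError("no model meets the requested quality threshold")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


# Hypothetical catalog used only for this sketch.
CATALOG = [
    ModelOption("large-model", 0.0300, 0.95),
    ModelOption("medium-model", 0.0050, 0.85),
    ModelOption("small-model", 0.0005, 0.70),
]

simple_task = route(CATALOG, min_quality=0.65)  # an easy prompt tolerates a cheaper model
hard_task = route(CATALOG, min_quality=0.90)    # a demanding prompt needs the strongest model
print(simple_task.name, hard_task.name)
```

In a real gateway the quality requirement would have to be estimated per prompt; here it is passed in explicitly to keep the sketch small.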

Features

- Unified API Access: Connect to and manage more than 250 LLMs through a single, OpenAI-compatible API.

- Smart Routing: Automatically routes prompts to the most cost-effective LLM while maintaining performance, potentially reducing costs by up to 70%.

- Advanced Observability: Monitor and trace complex AI agent interactions and workflows.

- Granular Cost Control: Set budgets and track AI spending at model, project, and user levels.

- Comprehensive LLM Analytics: Gain insights into performance metrics, usage patterns, and model effectiveness.

- Interactive Playground: Experiment with prompts across different models, compare outputs, and view associated costs.

- High Performance & Scalability: Built in Rust for enhanced speed and reliability compared to Python/JavaScript gateways.

- Guardrails: Implement controls for secure and compliant AI usage (available in paid tiers).
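As a rough illustration of tracking spend at the model, project, and user levels, the sketch below aggregates usage records along one dimension and flags entries that exceed a budget. The record fields, names, and dollar amounts are hypothetical and do not reflect LangDB's data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    model: str
    project: str
    user: str
    cost_usd: float


def spend_by(records: list[UsageRecord], dimension: str) -> dict[str, float]:
    """Sum cost per value of the given dimension: 'model', 'project', or 'user'."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, dimension)] += record.cost_usd
    return dict(totals)


def over_budget(totals: dict[str, float], budgets: dict[str, float]) -> list[str]:
    """Return keys whose spend exceeds their budget; keys without a budget are never flagged."""
    return sorted(k for k, spent in totals.items() if spent > budgets.get(k, float("inf")))


# Invented sample data for the sketch.
RECORDS = [
    UsageRecord("model-a", "search", "alice", 0.50),
    UsageRecord("model-b", "search", "bob", 0.25),
    UsageRecord("model-a", "chatbot", "alice", 1.00),
    UsageRecord("model-b", "chatbot", "bob", 0.25),
]

by_project = spend_by(RECORDS, "project")
by_user = spend_by(RECORDS, "user")
flagged = over_budget(by_project, {"search": 1.00, "chatbot": 1.00})
print(by_project, by_user, flagged)
```

The same `spend_by` helper covers all three levels by changing the `dimension` argument, which keeps the budget check independent of how spend is grouped.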

Use Cases

- Managing diverse LLM integrations across an enterprise.

- Optimizing and reducing operational costs for LLM usage.

- Monitoring and debugging complex AI agent workflows.

- Governing AI traffic for security and compliance.

- Comparing performance and cost across different LLMs.

- Streamlining AI development and deployment with a unified access point.

- Analyzing LLM usage patterns for better resource allocation.

LangDB Uptime Monitor

- Average Uptime: 100%

- Average Response Time: 623.97 ms
