Scorecard.io - Alternatives & Competitors

Testing for production-ready LLM applications, RAG systems, Agents, Chatbots.

Scorecard.io is an evaluation platform designed for testing and validating production-ready Generative AI applications, including LLMs, RAG systems, agents, and chatbots. It supports the entire AI production lifecycle from experiment design to continuous evaluation.

Ranked by Relevance

1. Humanloop: The LLM evals platform for enterprises to ship and scale AI with confidence

Humanloop is an enterprise-grade platform that provides tools for LLM evaluation, prompt management, and AI observability, enabling teams to develop, evaluate, and deploy trustworthy AI applications.

- Freemium

2. Agenta: End-to-End LLM Engineering Platform

Agenta is an LLM engineering platform offering tools for prompt engineering, versioning, evaluation, and observability in a single, collaborative environment.

- Freemium

- From $49

3. Reprompt: Collaborative prompt testing for confident AI deployment

Reprompt is a developer-focused platform that enables efficient testing and optimization of AI prompts with real-time analysis and comparison capabilities.

- Usage Based

4. Langtail: The low-code platform for testing AI apps

Langtail is a comprehensive testing platform that enables teams to test and debug LLM-powered applications with a spreadsheet-like interface, offering security features and integration with major LLM providers.

- Freemium

- From $99

5. Langtrace: Transform AI Prototypes into Enterprise-Grade Products

Langtrace is an open-source observability and evaluations platform designed to help developers monitor, evaluate, and enhance AI agents for enterprise deployment.

- Freemium

- From $31

6. LastMile AI: Ship generative AI apps to production with confidence.

LastMile AI empowers developers to seamlessly transition generative AI applications from prototype to production with a robust developer platform.

- Contact for Pricing

- API

7. Bot Test: Automated testing to build quality, reliability, and safety into your AI-based chatbot, with no code.

Bot Test offers automated, no-code testing solutions for AI-based chatbots, ensuring quality, reliability, and security. It provides comprehensive testing, smart evaluation, and enterprise-level scalability.

- Freemium

- From $25

8. ModelBench: No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

9. Prompt Hippo: Test and Optimize LLM Prompts with Science.

Prompt Hippo is an AI-powered testing suite for Large Language Model (LLM) prompts, designed to improve their robustness, reliability, and safety through side-by-side comparisons.

- Freemium

- From $100

10. BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

11. Lisapet.ai: AI Prompt testing suite for product teams

Lisapet.ai is an AI development platform designed to help product teams prototype, test, and deploy AI features efficiently by automating prompt testing.

- Paid

- From $9

-

12

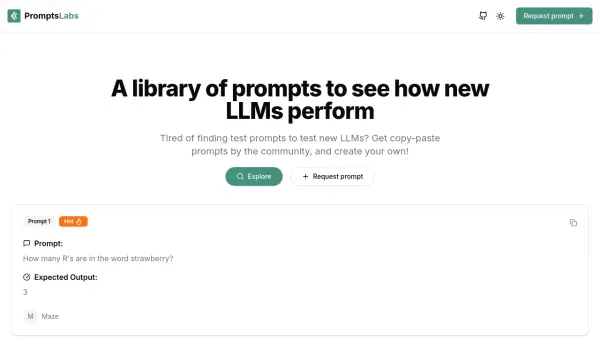

PromptsLabs A Library of Prompts for Testing LLMs

PromptsLabs A Library of Prompts for Testing LLMsPromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

13. Braintrust: The end-to-end platform for building world-class AI apps.

Braintrust provides an end-to-end platform for developing, evaluating, and monitoring Large Language Model (LLM) applications. It helps teams build robust AI products through iterative workflows and real-time analysis.

- Freemium

- From $249

14. Freeplay: The All-in-One Platform for AI Experimentation, Evaluation, and Observability

Freeplay provides comprehensive tools for AI teams to run experiments, evaluate model performance, and monitor production, streamlining the development process.

- Paid

- From $500

15. MLflow: ML and GenAI made simple

MLflow is an open-source, end-to-end MLOps platform for building better models and generative AI apps. It simplifies complex ML and generative AI projects, offering comprehensive management from development to production.

- Free

16. Relari: Trusting your AI should not be hard

Relari offers a contract-based development toolkit to define, inspect, and verify AI agent behavior using natural language, ensuring robustness and reliability.

- Freemium

- From $1000

17. ech0: Hybrid Human-AI Testing for Safer AI Deployments

ech0 provides comprehensive, scalable testing for AI agents, identifying security vulnerabilities, consistency issues, and policy compliance before production deployment.

- Freemium

18. Literal AI: Ship reliable LLM Products

Literal AI streamlines the development of LLM applications, offering tools for evaluation, prompt management, logging, monitoring, and more to build production-grade AI products.

- Freemium

19. Okareo: Error Discovery and Evaluation for AI Agents

Okareo provides error discovery and evaluation tools for AI agents, enabling faster iteration, increased accuracy, and optimized performance through advanced monitoring and fine-tuning.

- Freemium

- From $199

20. TestAI: Automated AI Voice Agent Testing

TestAI is an automated platform that ensures the performance, accuracy, and reliability of voice and chat agents. It offers real-world simulations, scenario testing, and trust & safety reporting, delivering flawless AI evaluations in minutes.

- Paid

- From $12

21. HoneyHive: AI Observability and Evaluation Platform for Building Reliable AI Products

HoneyHive is a comprehensive platform that provides AI observability, evaluation, and prompt management tools to help teams build and monitor reliable AI applications.

- Freemium

22. Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform designed for AI teams to test and automate evaluations of generative AI products and agents. It facilitates collaborative development and ensures high-quality LLM applications.

- Usage Based

23. Adaline: Ship reliable AI faster

Adaline is a collaborative platform for teams building with Large Language Models (LLMs), enabling efficient iteration, evaluation, deployment, and monitoring of prompts.

- Contact for Pricing

24. Promptotype: The platform for structured prompt engineering

Promptotype is a platform designed for structured prompt engineering, enabling users to develop, test, and monitor LLM tasks efficiently.

- Freemium

- From $6

25. Future AGI: World’s first comprehensive evaluation and optimization platform to help enterprises achieve 99% accuracy in AI applications across software and hardware.

Future AGI is a comprehensive evaluation and optimization platform designed to help enterprises build, evaluate, and improve AI applications, aiming for high accuracy across software and hardware.

- Freemium

- From $50

26. Autoblocks: Improve your LLM Product Accuracy with Expert-Driven Testing & Evaluation

Autoblocks is a collaborative testing and evaluation platform for LLM-based products that automatically improves through user and expert feedback, offering comprehensive tools for monitoring, debugging, and quality assurance.

- Freemium

- From $1750

27. Compare AI Models: AI Model Comparison Tool

Compare AI Models is a platform providing comprehensive comparisons and insights into various large language models, including GPT-4o, Claude, Llama, and Mistral.

- Freemium

28. Prompt Mixer: Open source tool for prompt engineering

Prompt Mixer is a desktop application for teams to create, test, and manage AI prompts and chains across different language models, featuring version control and comprehensive evaluation tools.

- Freemium

- From $29

29. EvalsOne: Evaluate LLMs & RAG Pipelines Quickly

EvalsOne is a platform for rapidly evaluating Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) pipelines using various metrics.

- Freemium

- From $19

30. Hegel AI: Developer Platform for Large Language Model (LLM) Applications

Hegel AI provides a developer platform for building, monitoring, and improving large language model (LLM) applications, featuring tools for experimentation, evaluation, and feedback integration.

- Contact for Pricing

31. Flow AI: The data engine for AI agent testing

Flow AI accelerates AI agent development by providing continuously evolving, validated test data grounded in real-world information and refined by domain experts.

- Contact for Pricing

32. Prompteus: One Platform to Rule AI.

Prompteus enables users to build, manage, and scale production-ready AI workflows efficiently, offering observability, intelligent routing, and cost optimization.

- Freemium

33. Arize: Unified Observability and Evaluation Platform for AI

Arize is a comprehensive platform designed to accelerate the development and improve the production of AI applications and agents.

- Freemium

- From $50

34. Maxim: Simulate, evaluate, and observe your AI agents

Maxim is an end-to-end evaluation and observability platform designed to help teams ship AI agents reliably and more than 5x faster.

- Paid

- From $29

35. Parea: Test and Evaluate your AI systems

Parea is a platform for testing, evaluating, and monitoring Large Language Model (LLM) applications, helping teams track experiments, collect human feedback, and deploy prompts confidently.

- Freemium

- From $150

36. Keywords AI: LLM monitoring for AI startups

Keywords AI is a comprehensive developer platform for LLM applications, offering monitoring, debugging, and deployment tools. It serves as a Datadog-like solution specifically designed for LLM applications.

- Freemium

- From $7

37. Lumora: Unlock AI Potential with Smart Prompt Management Tools

Lumora offers advanced tools to manage, optimize, and test AI prompts, ensuring efficient workflows and improved results across various AI platforms.

- Freemium

- From $15

38. PromptsRoyale: Optimize Your Prompts with AI-Powered Testing

PromptsRoyale refines and evaluates AI prompts through objective-based testing and scoring, ensuring optimal performance.

- Free

39. Evidently AI: Collaborative AI observability platform for evaluating, testing, and monitoring AI-powered products

Evidently AI is a comprehensive AI observability platform that helps teams evaluate, test, and monitor LLM and ML models in production, offering data drift detection, quality assessment, and performance monitoring capabilities.

- Freemium

- From $50

40. ManagePrompt: Build AI-powered apps in minutes, not months

ManagePrompt provides the infrastructure for building and deploying AI projects, handling integration with top AI models, testing, authentication, analytics, and security.

- Free

41. OpenLIT: Open Source Platform for AI Engineering

OpenLIT is an open-source observability platform designed to streamline AI development workflows, particularly for Generative AI and LLMs, offering features like prompt management, performance tracking, and secure secrets management.

- Other

42. klu.ai: Next-gen LLM App Platform for Confident AI Development

Klu is an all-in-one LLM App Platform that enables teams to experiment, version, and fine-tune GPT-4 Apps with collaborative prompt engineering and comprehensive evaluation tools.

- Freemium

- From $30

43. Latitude: Open-source prompt engineering platform for reliable AI product delivery

Latitude is an open-source platform that helps teams track, evaluate, and refine their AI prompts using real data, enabling confident deployment of AI products.

- Freemium

- From $99

44. PromptMage: A Python framework for simplified LLM-based application development

PromptMage is a Python framework that streamlines the development of complex, multi-step applications powered by Large Language Models (LLMs), offering version control, testing capabilities, and automated API generation.

- Other

45. Rhesis AI: Open-source test generation SDK for LLM applications

Rhesis AI offers an open-source SDK to generate comprehensive, context-specific test sets for LLM applications, enhancing AI evaluation, reliability, and compliance.

- Freemium

46. Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

47. Promptrr.io: AI Prompt Marketplace - ChatGPT, Bard, Leonardo & Midjourney Prompts

Promptrr.io is an AI prompt marketplace where users can buy and sell prompts for various AI platforms like Midjourney, ChatGPT, Leonardo AI, and Gemini.

- Paid

48. Promptmetheus: Forge better LLM prompts for your AI applications and workflows

Promptmetheus is a comprehensive prompt engineering IDE that helps developers and teams create, test, and optimize language model prompts with support for 100+ LLMs and popular inference APIs.

- Freemium

- From $29

49. PromptOwl: The Future of Work is Agentic

PromptOwl is a no-code platform for building and managing AI-powered workflows and agents, enabling businesses to automate complex tasks and streamline operations across departments.

- Contact for Pricing

50. Lunary: Where GenAI teams manage and improve LLM chatbots

Lunary is a comprehensive platform for AI developers to manage, monitor, and optimize LLM chatbots with advanced analytics, security features, and collaborative tools.

- Freemium

- From $20
