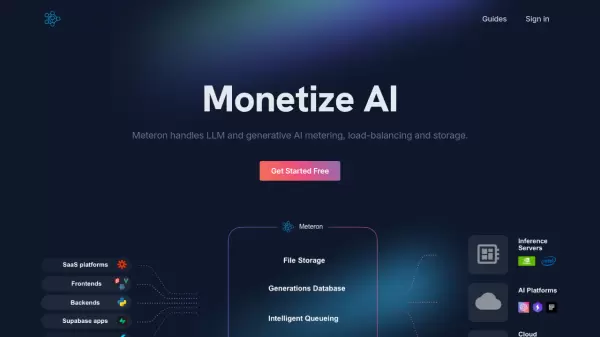

What is Meteron?

Meteron streamlines AI infrastructure management by providing essential tools for handling large language models and generative AI applications. The platform offers sophisticated metering mechanisms, elastic scaling capabilities, and unlimited cloud storage solutions, enabling developers to build and scale AI applications efficiently.

The platform supports integration with various AI models, including Llama, Mistral, Stable Diffusion, and DALL-E, while providing robust features such as request queuing, load balancing, and per-user metering. Its infrastructure is designed to handle high-demand spikes and ensure optimal resource allocation.

Features

- Metering System: Charge users per request or per token

- Elastic Scaling: Queue and load-balance requests across servers

- Cloud Storage: Unlimited storage with major cloud provider support

- Model Compatibility: Works with various text and image generation models

- User Limits: Per-user metering and usage tracking

- Load Management: Intelligent QoS and automatic load balancing

- Priority Queue: Support for different priority classes of users

- Cloud Integration: Support for custom cloud storage solutions

Use Cases

- Building multi-tenant AI applications

- Scaling image generation services

- Managing LLM-powered applications

- Implementing usage-based billing

- Handling high-demand AI services

- Cloud asset management

- User quota enforcement

FAQs

-

Do I need to use any special libraries when integrating Meteron?

No, you can use any standard HTTP client such as curl, Python requests, or JavaScript fetch libraries. Instead of sending requests to your inference endpoint, you'll send them to Meteron's generation API. -

How does the queue prioritization work?

Meteron provides standard business rules with three priority classes: high (VIP users with no queueing delays), medium (some delays but priority over low), and low (served last, typically for free users). -

Can I host Meteron server myself?

Yes, on-premise licenses are available. The system comes batteries included and can run on any cloud provider. -

How does per-user metering work?

When adding model endpoints in Meteron, you can specify daily and monthly limits. By adding the X-User header with user ID or email in image generation requests, Meteron ensures users cannot exceed these limits.

Related Queries

Helpful for people in the following professions

Meteron Uptime Monitor

Average Uptime

99.86%

Average Response Time

130.33 ms

Featured Tools

Join Our Newsletter

Stay updated with the latest AI tools, news, and offers by subscribing to our weekly newsletter.