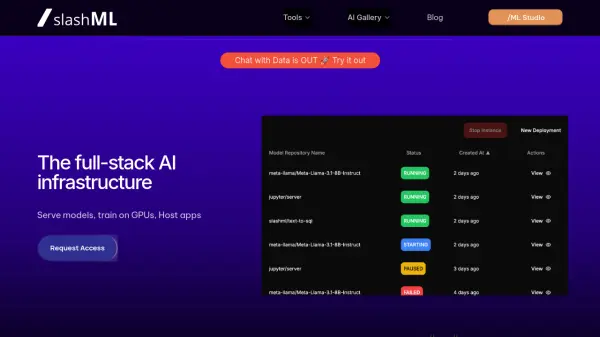

What is /ML?

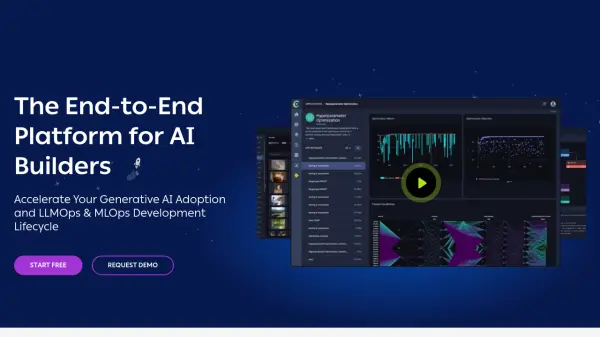

/ML is a full-stack AI infrastructure platform for developing and deploying artificial intelligence models. It reduces configuration work by providing Docker support and a user interface for many operations, so engineers can spend their time on models rather than on managing infrastructure. It supports serving Large Language Models (LLMs) available on Hugging Face and training multi-modal models, either on /ML Workspaces GPUs or on your own GPU resources.

/ML also hosts interactive AI applications built with popular frameworks such as Streamlit, Gradio, and Dash, which makes it easy to share dashboards and apps with users. Its cost observability features give clear insight into cloud spending, helping teams manage and optimize the budgets of their AI projects. Together, these features make /ML a practical option for engineers who want to scale their AI work without building the supporting infrastructure themselves.

Features

- Large Language Model Serving: Serve Large Language Models (LLMs), especially those available on Hugging Face.

- Multi-modal Model Training: Train multi-modal models using either /ML Workspaces' GPUs or your own.

- AI Application Hosting: Host and share interactive applications built with Streamlit, Gradio, and Dash.

- Simplified Configuration: Uses Docker and a user interface to handle configuration, reducing setup complexity.

- Cost Observability: Provides tools to monitor and gain clear insights into cloud spending for AI projects.

Use Cases

- Serving and scaling Large Language Models for various AI-driven applications.

- Training and deploying complex multi-modal AI models efficiently.

- Developing and hosting interactive AI dashboards and applications for end-users.

- Optimizing cloud expenditure for AI/ML workloads through detailed cost analysis.

- Streamlining the MLOps lifecycle from model development to deployment.

FAQs


What types of AI models can be managed with /ML?

/ML supports serving Large Language Models, especially those from Hugging Face, and training multi-modal models.

Does /ML provide its own GPU resources for model training?

Yes. You can train multi-modal models on /ML Workspaces using its GPUs, or use your own GPU resources instead.

Which frameworks are supported for hosting AI applications on /ML?

/ML allows you to host AI applications built with popular frameworks such as Streamlit, Gradio, and Dash.

How does /ML assist with managing the costs of AI projects?

/ML includes a cost observability feature that increases visibility into cloud spending and provides clear insights for better budget management.

What makes /ML user-friendly for developers?

/ML simplifies complex configurations by providing Docker support and a user interface for most operations, allowing developers to focus on building and deploying models rather than infrastructure setup.


/ML Uptime Monitor

- Average Uptime: 100%

- Average Response Time: 210.53 ms
