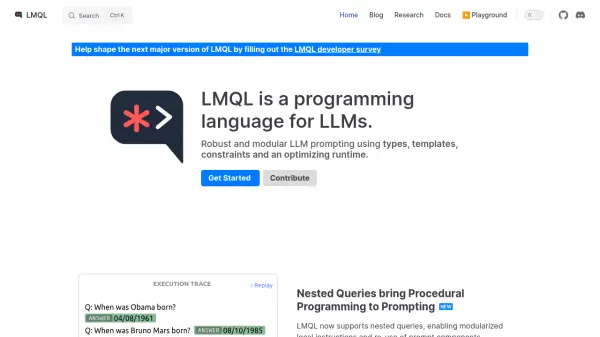

What is LMQL?

LMQL (Language Model Query Language) is a programming language for robust and modular LLM prompting, built on types, templates, constraints, and an optimizing runtime. It gives developers a structured way to interact with large language models: prompts are written precisely, and the shape of the model's output can be controlled by the language itself.

The language supports nested queries, which let you modularize local instructions and reuse prompt components. It also runs on multiple backends, so the same LMQL code can be ported across llama.cpp, OpenAI, and Hugging Face Transformers.

Features

- Nested Queries: Enables modularized prompting and reuse of prompt components.

- Constrained LLMs: Uses 'where' clauses to define constraints enforced by the runtime.

- Types and Regex: Supports typed variables and regular-expression constraints to guarantee output formats.

- Multi-Part Prompts: Allows for complex prompt construction using Python control flow.

- Meta Prompting: Facilitates advanced prompting techniques.

- Cross-Backend Compatibility: Supports multiple LLM backends (llama.cpp, OpenAI, Hugging Face Transformers).

- Python Integration: Seamlessly integrates with Python code.
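To illustrate the general idea behind runtime-enforced constraints and typed variables, here is a toy, pure-Python sketch of constrained greedy decoding. It is not LMQL's API or its actual implementation; the real runtime works on a model's tokenizer vocabulary and is considerably more involved. Everything here (`VOCAB`, `toy_model_scores`, `is_valid_int_prefix`, `constrained_greedy_decode`) is hypothetical. At each step, the decoder discards any candidate token that would make the output impossible to complete as a valid integer answer, then picks the highest-scoring token that remains.

```python
from typing import Callable, Dict, List

# Hypothetical toy vocabulary; a real LLM has tens of thousands of tokens.
VOCAB: List[str] = ["4", "2", "four", " ", "\n", "!", "0"]


def toy_model_scores(prefix: str) -> Dict[str, float]:
    """Hypothetical stand-in for an LLM: returns a score per next token.

    It prefers the word "four" over digits, and strongly prefers a newline
    once some text has been generated, so that generation terminates.
    """
    scores = {"four": 0.9, "!": 0.5, "4": 0.4, "2": 0.3, " ": 0.2, "0": 0.1, "\n": 0.05}
    if prefix:
        scores["\n"] = 1.0
    return scores


def is_valid_int_prefix(text: str) -> bool:
    """True if `text` can still become a digits-only answer ending in a newline."""
    if text.endswith("\n"):
        # A terminating newline is only allowed after at least one digit.
        return text[:-1].isdigit()
    return text == "" or text.isdigit()


def constrained_greedy_decode(
    score_fn: Callable[[str], Dict[str, float]],
    vocab: List[str],
    is_valid: Callable[[str], bool],
    max_tokens: int = 5,
) -> str:
    """Greedy decoding that masks out tokens violating `is_valid`."""
    output = ""
    for _ in range(max_tokens):
        scores = score_fn(output)
        # Mask: keep only tokens whose addition leaves the output valid.
        allowed = [tok for tok in vocab if is_valid(output + tok)]
        if not allowed:
            break
        best = max(allowed, key=lambda tok: scores.get(tok, float("-inf")))
        output += best
        if best == "\n":  # stop condition, similar in spirit to a stop phrase
            break
    return output


if __name__ == "__main__":
    # With the constraint, the word "four" is never allowed.
    print(repr(constrained_greedy_decode(toy_model_scores, VOCAB, is_valid_int_prefix)))  # → '4\n'
    # Without it (every token allowed), the toy model's favourite wins.
    print(repr(constrained_greedy_decode(toy_model_scores, VOCAB, lambda t: True)))  # → 'four\n'
```

The design point is that the constraint is checked during generation rather than after it: an invalid answer such as `"four"` is never produced, so no retry or post-hoc parsing is needed.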

Use Cases

- Developing chatbots with constrained response formats.

- Creating modular and reusable prompt components.

- Building applications requiring precise control over LLM output.

- Porting LLM applications across different backend providers.

- Implementing complex prompt logic using Python.

- Generating structured data from LLMs.
