Open-source LLM development tools - AI tools

Axolotl AI: We make fine-tuning accessible, scalable, fun

Axolotl AI is a free, open-source tool designed to make fine-tuning Large Language Models (LLMs) faster, more accessible, and scalable across various AI models and platforms.

- Free

Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

Hegel AI: Developer Platform for Large Language Model (LLM) Applications

Hegel AI provides a developer platform for building, monitoring, and improving large language model (LLM) applications, featuring tools for experimentation, evaluation, and feedback integration.

- Contact for Pricing

Transformer Lab: The Open Source Platform for Training Advanced AI Models

Transformer Lab is an open-source platform enabling researchers, ML engineers, and developers to collaboratively build, train, evaluate, and deploy AI models with features like provenance, reproducibility, and transparency.

- Free

Flowise: Build LLM Apps Easily - Open Source Low-Code Tool for LLM Orchestration

Flowise is an open-source low-code platform that enables developers to build customized LLM orchestration flows and AI agents through a drag-and-drop interface.

- Freemium

- From $35

Langfuse: Open Source LLM Engineering Platform

Langfuse provides an open-source platform for tracing, evaluating, and managing prompts to debug and improve LLM applications.

- Freemium

- From $59

Gorilla: Large Language Model Connected with Massive APIs

Gorilla is an open-source Large Language Model (LLM) developed by UC Berkeley, designed to interact with a wide range of services by invoking API calls.

- Free
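
An API-calling model like Gorilla turns a request into a call, and the application then has to execute that call safely. The sketch below shows only that last step, under two assumptions: the model emits a single Python-style call string, and the callable services live in a local registry. `API_REGISTRY`, `register`, `get_forecast`, and `execute_call` are hypothetical names, not part of Gorilla.

```python
import ast
from typing import Any, Callable, Dict

# Hypothetical registry mapping dotted API names to local callables.
API_REGISTRY: Dict[str, Callable[..., Any]] = {}


def register(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that exposes a function under a dotted API name."""
    def wrap(fn: Callable[..., Any]) -> Callable[..., Any]:
        API_REGISTRY[name] = fn
        return fn
    return wrap


@register("weather.get_forecast")
def get_forecast(city: str, days: int = 1) -> str:
    # Hypothetical stand-in for a real HTTP-backed service.
    return f"{days}-day forecast for {city}"


def dotted_name(node: ast.expr) -> str:
    """Rebuild 'a.b.c' from a chain of ast.Name / ast.Attribute nodes."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Attribute):
        return f"{dotted_name(node.value)}.{node.attr}"
    raise ValueError("unsupported callee expression")


def execute_call(call_text: str) -> Any:
    """Parse one model-emitted call and dispatch it, allowing only
    registered API names and literal arguments (no arbitrary eval)."""
    tree = ast.parse(call_text.strip(), mode="eval")
    if not isinstance(tree.body, ast.Call):
        raise ValueError("expected a single function call")
    name = dotted_name(tree.body.func)
    if name not in API_REGISTRY:
        raise KeyError(f"unknown API: {name}")
    args = [ast.literal_eval(arg) for arg in tree.body.args]
    kwargs: Dict[str, Any] = {}
    for kw in tree.body.keywords:
        if kw.arg is None:  # reject **kwargs unpacking
            raise ValueError("keyword unpacking is not allowed")
        kwargs[kw.arg] = ast.literal_eval(kw.value)
    return API_REGISTRY[name](*args, **kwargs)


print(execute_call("weather.get_forecast(city='Paris', days=3)"))  # 3-day forecast for Paris
```

Parsing with `ast` rather than calling `eval` means a hallucinated or malicious call cannot run arbitrary code; anything outside the registry fails with an error.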

Missing Studio: An open-source AI studio for rapid development and robust deployment of production-ready generative AI.

Missing Studio is an open-source AI platform designed for developers to build and deploy generative AI applications. It offers tools for managing LLMs, optimizing performance, and ensuring reliability.

- Free

OpenTools: The API for Enhanced LLM Tool Use

OpenTools provides a unified API enabling developers to connect Large Language Models (LLMs) with a diverse ecosystem of tools, simplifying integration and management.

- Usage Based

Literal AI: Ship reliable LLM Products

Literal AI streamlines the development of LLM applications, offering tools for evaluation, prompt management, logging, monitoring, and more to build production-grade AI products.

- Freemium

PromptsLabs: A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

phoenix.arize.com: Open-source LLM tracing and evaluation

Phoenix is an open-source tool from Arize for tracing, evaluating, and experimenting with AI applications, giving developers real-time insight they can use to debug and optimize those applications.

- Freemium
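
Tracing tools such as Phoenix record each step of an LLM application as a span with timing, inputs, outputs, and a link to its parent step. The sketch below illustrates that idea with a plain decorator that keeps spans in memory; `traced`, `SPANS`, and the toy `retrieve`/`answer` functions are hypothetical and are not Phoenix's API.

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Collected spans; a real tracer would export these to a backend instead.
SPANS: List[Dict[str, Any]] = []
_open: List[int] = []  # indices of currently open spans, used for parent links


def traced(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Record name, parent, inputs, output and duration of each call."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        span: Dict[str, Any] = {
            "name": fn.__name__,
            "parent": SPANS[_open[-1]]["name"] if _open else None,
            "inputs": {"args": args, "kwargs": kwargs},
        }
        SPANS.append(span)
        _open.append(len(SPANS) - 1)
        start = time.perf_counter()
        try:
            span["output"] = fn(*args, **kwargs)
            return span["output"]
        finally:
            span["duration_s"] = time.perf_counter() - start
            _open.pop()
    return wrapper


@traced
def retrieve(query: str) -> List[str]:
    return [f"doc about {query}"]


@traced
def answer(query: str) -> str:
    docs = retrieve(query)  # the nested call is recorded as a child span
    return f"Answer based on {len(docs)} document(s)"


answer("tracing")
for s in SPANS:
    print(s["name"], "parent:", s["parent"])
```

Recording the parent of each span is what lets a trace viewer show a request as a tree of steps rather than a flat log.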

BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other
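
The test-suite workflow described for BenchLLM can be sketched in a few lines: each case pairs an input with acceptable answers, and a pluggable evaluator decides pass or fail. Everything here (`EvalCase`, `exact_match`, `contains_any`, `run_suite`, and the toy model) is hypothetical and not BenchLLM's actual API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Evaluator = Callable[[str, List[str]], bool]


@dataclass
class EvalCase:
    prompt: str
    expected: List[str]  # any of these answers counts as correct


def exact_match(output: str, expected: List[str]) -> bool:
    return output.strip() in expected


def contains_any(output: str, expected: List[str]) -> bool:
    return any(e.lower() in output.lower() for e in expected)


def run_suite(model: Callable[[str], str], cases: List[EvalCase], evaluator: Evaluator) -> Dict[str, object]:
    """Run every case through the model and collect a simple quality report."""
    failures = []
    for case in cases:
        output = model(case.prompt)
        if not evaluator(output, case.expected):
            failures.append({"prompt": case.prompt, "output": output})
    return {"passed": len(cases) - len(failures), "total": len(cases), "failures": failures}


def toy_model(prompt: str) -> str:
    # Hypothetical LLM-powered app under test.
    answers = {"What is 2+2?": "The answer is 4", "Capital of France?": "Paris"}
    return answers.get(prompt, "I don't know")


cases = [
    EvalCase("What is 2+2?", ["4"]),
    EvalCase("Capital of France?", ["Paris"]),
    EvalCase("Largest planet?", ["Jupiter"]),
]
print(run_suite(toy_model, cases, exact_match)["passed"])   # prints 1
print(run_suite(toy_model, cases, contains_any)["passed"])  # prints 2
```

Swapping the evaluator changes the verdict on the same outputs: strict matching rejects "The answer is 4" while substring matching accepts it, which is why the choice of evaluation strategy matters.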

Agenta: End-to-End LLM Engineering Platform

Agenta is an LLM engineering platform offering tools for prompt engineering, versioning, evaluation, and observability in a single, collaborative environment.

- Freemium

- From $49

EleutherAI: Empowering Open-Source Artificial Intelligence Research

EleutherAI is a research institute focused on advancing and democratizing open-source AI, particularly in language modeling, interpretability, and alignment. It trains, releases, and evaluates open-source LLMs.

- Free

Superpipe: The OSS experimentation platform for LLM pipelines

Superpipe is an open-source experimentation platform designed for building, evaluating, and optimizing Large Language Model (LLM) pipelines to improve accuracy and minimize costs. It can be deployed on the user's own infrastructure for greater privacy and security.

- Free
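
Superpipe's stated goal, improving accuracy while minimizing cost, amounts to evaluating pipeline variants on labeled data and choosing the cheapest one that meets an accuracy target. The sketch below assumes two hypothetical keyword-based "models" with made-up per-call costs and two preprocessing options; none of these names come from Superpipe.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple


def small_model(text: str) -> str:
    # Hypothetical cheap classifier: case-sensitive keyword check.
    return "positive" if "good" in text else "negative"


def large_model(text: str) -> str:
    # Hypothetical pricier classifier: broader, case-insensitive keywords.
    lowered = text.lower()
    return "positive" if any(w in lowered for w in ("good", "great", "love")) else "negative"


# Each model is paired with an assumed cost per call in dollars.
MODELS: Dict[str, Tuple[Callable[[str], str], float]] = {
    "small": (small_model, 0.001),
    "large": (large_model, 0.010),
}
PREPROCESSORS: Dict[str, Callable[[str], str]] = {"raw": lambda t: t, "lower": str.lower}
LABELED: List[Tuple[str, str]] = [
    ("Good film", "positive"),
    ("I love it", "positive"),
    ("great acting", "positive"),
    ("boring", "negative"),
]

Result = Tuple[str, str, float, float]  # (preprocessor, model, accuracy, total cost)


def evaluate(pre: str, model: str) -> Result:
    fn, cost_per_call = MODELS[model]
    correct = sum(fn(PREPROCESSORS[pre](x)) == y for x, y in LABELED)
    return pre, model, correct / len(LABELED), cost_per_call * len(LABELED)


def best_config(min_accuracy: float) -> Optional[Result]:
    """Grid-search every combination, then keep the cheapest that meets the target."""
    results = [evaluate(pre, m) for pre, m in product(PREPROCESSORS, MODELS)]
    acceptable = [r for r in results if r[2] >= min_accuracy]
    return min(acceptable, key=lambda r: r[3]) if acceptable else None


print(best_config(0.5))  # cheapest variant with at least 50% accuracy
print(best_config(0.9))
```

In this toy setup, lowercasing the input lets the cheap model reach 50% accuracy at a tenth of the cost, while a 90% target forces the larger model: the kind of trade-off such a search is meant to surface.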

LangChain: Build and Deploy LLM-Powered Applications and Agents

LangChain is a comprehensive framework for developing and deploying applications powered by large language models (LLMs), enabling the creation of sophisticated AI agents, chatbots, and data analysis tools. It facilitates building context-aware and reasoning applications.

- Free

LM Studio: Discover, download, and run local LLMs on your computer

LM Studio is a desktop application that lets users run Large Language Models (LLMs) locally and offline, supporting model families including Llama, Mistral, Phi, Gemma, DeepSeek, and Qwen 2.5.

- Free

LMQL: A programming language for LLMs.

LMQL is a programming language designed for large language models, offering robust and modular prompting with types, templates, and constraints.

- Free
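
Constraints in a language like LMQL restrict what the model is allowed to generate. One common way to enforce a choice constraint is to mask, at every decoding step, each token that could not lead to an allowed value. The sketch below shows that technique with single characters as tokens and a toy scoring function in place of a real model; `toy_scores` and `constrained_decode` are hypothetical, and LMQL's own implementation is more general.

```python
from typing import Callable, Dict, List

Scorer = Callable[[str, List[str]], Dict[str, float]]


def toy_scores(prefix: str, vocab: List[str]) -> Dict[str, float]:
    # Stand-in for an LLM's next-token scores. A real model would condition on
    # `prefix`; this toy just ranks characters by a fixed preference order.
    preference = "nyoesa"
    return {t: -float(preference.index(t)) if t in preference else -float(len(preference)) for t in vocab}


def constrained_decode(allowed: List[str], score: Scorer) -> str:
    """Greedy decoding that masks any token which would stop the output from
    being a prefix of some allowed value; ends once an allowed value is complete."""
    vocab = sorted({ch for word in allowed for ch in word})
    out = ""
    while out not in allowed:
        scores = score(out, vocab)
        valid = [t for t in vocab if any(w.startswith(out + t) for w in allowed)]
        out += max(valid, key=lambda t: scores[t])
    return out


print(constrained_decode(["yes", "no"], toy_scores))     # no
print(constrained_decode(["yes", "maybe"], toy_scores))  # yes
```

Because invalid tokens are removed before the choice is made, the output is guaranteed to be one of the allowed values even when the unconstrained model would prefer something else.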

LLM Explorer: Discover and Compare Open-Source Language Models

LLM Explorer is a comprehensive platform for discovering, comparing, and accessing over 46,000 open-source Large Language Models (LLMs) and Small Language Models (SLMs).

- Free

-

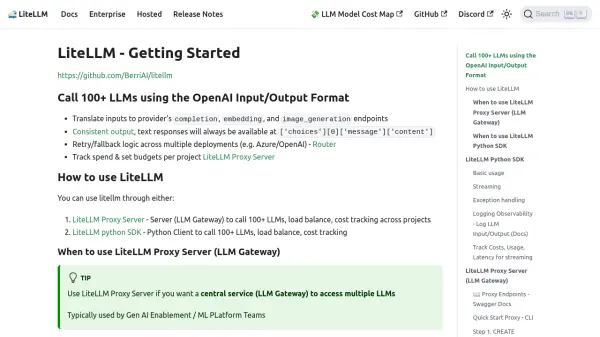

docs.litellm.ai Unified Interface for Accessing 100+ LLMs

docs.litellm.ai Unified Interface for Accessing 100+ LLMsLiteLLM provides a simplified and standardized way to interact with over 100 large language models (LLMs) using a consistent OpenAI-compatible input/output format.

- Free
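
The idea behind a unified interface like LiteLLM is an adapter layer: each provider's response format is translated into one OpenAI-style shape, so application code does not change when the provider does. The sketch below assumes two imaginary providers with made-up response formats and routes on a `provider/model` prefix; `unified_completion` and every provider name here are hypothetical and not LiteLLM's implementation.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, str]


# Imaginary providers, each returning its own response format.
def provider_a_call(prompt: str) -> Dict[str, Any]:
    return {"output_text": f"A says: {prompt}", "tokens": {"in": 3, "out": 5}}


def provider_b_call(prompt: str) -> Dict[str, Any]:
    return {"result": {"message": f"B says: {prompt}"}, "usage_in": 3, "usage_out": 4}


def openai_shape(model: str, content: str, prompt_tokens: int, completion_tokens: int) -> Dict[str, Any]:
    """Build a response in the OpenAI chat-completion layout."""
    return {
        "model": model,
        "choices": [{"index": 0, "message": {"role": "assistant", "content": content}, "finish_reason": "stop"}],
        "usage": {
            "prompt_tokens": prompt_tokens,
            "completion_tokens": completion_tokens,
            "total_tokens": prompt_tokens + completion_tokens,
        },
    }


def adapt_a(model: str, prompt: str) -> Dict[str, Any]:
    raw = provider_a_call(prompt)
    return openai_shape(model, raw["output_text"], raw["tokens"]["in"], raw["tokens"]["out"])


def adapt_b(model: str, prompt: str) -> Dict[str, Any]:
    raw = provider_b_call(prompt)
    return openai_shape(model, raw["result"]["message"], raw["usage_in"], raw["usage_out"])


ADAPTERS: Dict[str, Callable[[str, str], Dict[str, Any]]] = {"provider_a": adapt_a, "provider_b": adapt_b}


def unified_completion(model: str, messages: List[Message]) -> Dict[str, Any]:
    """Route 'provider/model-name' to the matching adapter."""
    provider, _, _ = model.partition("/")
    if provider not in ADAPTERS:
        raise ValueError(f"unsupported provider: {provider}")
    # Flatten the chat history into one prompt (a simplification for this sketch).
    prompt = "\n".join(m["content"] for m in messages)
    return ADAPTERS[provider](model, prompt)


for name in ("provider_a/model-x", "provider_b/model-y"):
    reply = unified_completion(name, [{"role": "user", "content": "hello"}])
    print(reply["choices"][0]["message"]["content"], reply["usage"]["total_tokens"])
```

Adding a provider in this design means writing one more adapter; callers keep reading `choices[0].message.content` and `usage` the same way.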

Rig: Build Modular and Scalable LLM Applications in Rust

Rig is a Rust-based framework for building modular and scalable LLM applications. It offers a unified LLM interface, Rust-powered performance, and advanced AI workflow abstractions.

- Free

LlamaEdge: The easiest, smallest and fastest local LLM runtime and API server.

LlamaEdge is a lightweight and fast local LLM runtime and API server, powered by Rust & WasmEdge, designed for creating cross-platform LLM agents and web services.

- Free

Petals: Run large language models at home, BitTorrent-style.

Petals enables users to run large language models like Llama 3.1 and Mixtral collaboratively, distributing the load across a network of consumer-grade GPUs.

- Free
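
Distributing a model "BitTorrent-style", as Petals describes, means splitting its layers across many GPUs so that no single machine has to hold the whole model, with hidden states passed from one peer to the next. The toy sketch below mimics that flow in a single process; `Peer`, `assign_layers`, and the arithmetic "layers" are hypothetical stand-ins, and a real deployment would add networking, failure handling, and actual transformer blocks.

```python
from typing import Callable, Dict, List, Sequence

Hidden = List[float]
Layer = Callable[[Hidden], Hidden]


def make_layer(i: int) -> Layer:
    # Toy stand-in for a transformer block: scale and shift every hidden value.
    return lambda h: [x * 2 + i for x in h]


class Peer:
    """Hypothetical volunteer node hosting a contiguous slice of the model's layers."""

    def __init__(self, name: str, layers: Sequence[Layer]) -> None:
        self.name = name
        self.layers = list(layers)

    def forward(self, hidden: Hidden) -> Hidden:
        for layer in self.layers:
            hidden = layer(hidden)
        return hidden


def assign_layers(layers: List[Layer], capacities: Dict[str, int]) -> List[Peer]:
    """Give each peer as many consecutive layers as it can hold (e.g. by GPU memory)."""
    peers: List[Peer] = []
    start = 0
    for name, capacity in capacities.items():
        if start >= len(layers):
            break
        peers.append(Peer(name, layers[start:start + capacity]))
        start += capacity
    if start < len(layers):
        raise ValueError("not enough total capacity to host every layer")
    return peers


def distributed_forward(peers: List[Peer], hidden: Hidden) -> Hidden:
    # In a real swarm each hop would send the hidden state over the network.
    for peer in peers:
        hidden = peer.forward(hidden)
    return hidden


layers = [make_layer(i) for i in range(5)]
peers = assign_layers(layers, {"alice": 2, "bob": 2, "carol": 3})
print([(p.name, len(p.layers)) for p in peers])  # [('alice', 2), ('bob', 2), ('carol', 1)]
print(distributed_forward(peers, [1.0]))         # [58.0]
```

Because each peer runs its layers in order and hands the result on, the chained forward pass gives the same output as running all five layers on one machine.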

Keywords AI: LLM monitoring for AI startups

Keywords AI is a comprehensive developer platform for LLM applications, offering monitoring, debugging, and deployment tools. It serves as a Datadog-like solution specifically designed for LLM applications.

- Freemium

- From $7

LLMStack: Open-source platform to build AI Agents, workflows and applications with your data

LLMStack is an open-source development platform that enables users to build AI agents, workflows, and applications by integrating various model providers and custom data sources.

- Other

Lora: Integrate local LLM with one line of code.

Lora provides an SDK for integrating a fine-tuned, mobile-optimized local Large Language Model (LLM) into applications with minimal setup, and claims performance comparable to GPT-4o-mini.

- Freemium

aider: AI Pair Programming in Your Terminal

Aider is a command-line tool that enables pair programming with LLMs to edit code in your local git repository. It supports various LLMs and reports strong results on software engineering benchmarks.

- Free
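
A tool that lets an LLM edit files in your repository needs a reliable way to apply the model's proposed changes. One approach, modeled loosely on the search/replace edit blocks aider can ask models to produce, is to make every edit quote the exact existing text alongside its replacement. The sketch below assumes a simplified block format and a hypothetical `apply_edits` helper; it is not aider's parser.

```python
import re

# Simplified edit-block format (an assumption for this sketch):
# <<<<<<< SEARCH / old text / ======= / new text / >>>>>>> REPLACE
EDIT_BLOCK = re.compile(r"<<<<<<< SEARCH\n(.*?)\n=======\n(.*?)\n>>>>>>> REPLACE", re.DOTALL)


def apply_edits(source: str, llm_reply: str) -> str:
    """Apply every edit block in the reply; each search text must match exactly once."""
    blocks = EDIT_BLOCK.findall(llm_reply)
    if not blocks:
        raise ValueError("no edit blocks found")
    for search, replace in blocks:
        matches = source.count(search)
        if matches != 1:
            raise ValueError(f"search text matched {matches} times: {search!r}")
        source = source.replace(search, replace, 1)
    return source


original = "def add(a, b):\n    return a - b\n"
reply = (
    "Fix the operator:\n"
    "<<<<<<< SEARCH\n"
    "    return a - b\n"
    "=======\n"
    "    return a + b\n"
    ">>>>>>> REPLACE\n"
)
print(apply_edits(original, reply))
```

Requiring a unique match means an ambiguous or hallucinated edit fails loudly instead of silently changing the wrong line, and working inside a git repository keeps each applied change easy to review or revert.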

LlamaHub: Kickstart Your RAG Application with Data Loaders and Agent Tools

LlamaHub is a repository providing data loaders, agent tools, and LlamaPacks to quickly build and customize Retrieval-Augmented Generation (RAG) applications using frameworks like LlamaIndex and LangChain.

- Free
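
Data loaders like those in LlamaHub feed the retrieval step of a RAG application: documents are loaded, the ones most relevant to a query are retrieved, and they are placed into the prompt. The sketch below shows that pipeline with bag-of-words cosine similarity standing in for learned embeddings; `load_documents`, `retrieve_top_k`, and `build_prompt` are hypothetical and are not LlamaHub, LlamaIndex, or LangChain APIs.

```python
import math
import re
from collections import Counter
from typing import Dict, List

Doc = Dict[str, object]


def load_documents(raw_texts: List[str]) -> List[Doc]:
    # Hypothetical stand-in for a data loader (files, web pages, databases, ...).
    return [{"id": i, "text": text} for i, text in enumerate(raw_texts)]


def vectorize(text: str) -> Counter:
    # Bag-of-words term counts; real systems typically use learned embeddings.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_top_k(query: str, docs: List[Doc], k: int = 2) -> List[Doc]:
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(str(d["text"]))), reverse=True)[:k]


def build_prompt(query: str, docs: List[Doc]) -> str:
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in docs)
    return f"Answer using only the context below.\n{context}\n\nQuestion: {query}"


docs = load_documents([
    "WebGPU lets models run inside the browser.",
    "Petals splits models across many GPUs.",
    "Rust is a systems programming language.",
])
question = "Which tool splits models across GPUs?"
print(build_prompt(question, retrieve_top_k(question, docs, k=1)))
```

Swapping `vectorize` for an embedding model and the list for a vector store changes retrieval quality, not the overall load, retrieve, and prompt structure.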

WebLLM: High-Performance In-Browser LLM Inference Engine

WebLLM enables running large language models (LLMs) directly within a web browser using WebGPU for hardware acceleration, reducing server costs and enhancing privacy.

- Free
