LLM prompt testing tool - AI tools

PromptsLabs: A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free

Promptotype: The platform for structured prompt engineering

Promptotype is a platform designed for structured prompt engineering, enabling users to develop, test, and monitor LLM tasks efficiently.

- Freemium

- From $6

ModelBench: No-Code LLM Evaluations

ModelBench enables teams to rapidly deploy AI solutions with no-code LLM evaluations. It allows users to compare over 180 models, design and benchmark prompts, and trace LLM runs, accelerating AI development.

- Free Trial

- From $49

Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform designed for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249

SysPrompt: The collaborative prompt CMS for LLM engineers

SysPrompt is a collaborative Content Management System (CMS) designed for LLM engineers to manage, version, and collaborate on prompts, facilitating faster development of better LLM applications.

- Paid

Prompt Hippo: Test and Optimize LLM Prompts with Science.

Prompt Hippo is an AI-powered testing suite for Large Language Model (LLM) prompts, designed to improve their robustness, reliability, and safety through side-by-side comparisons.

- Freemium

- From $100

-

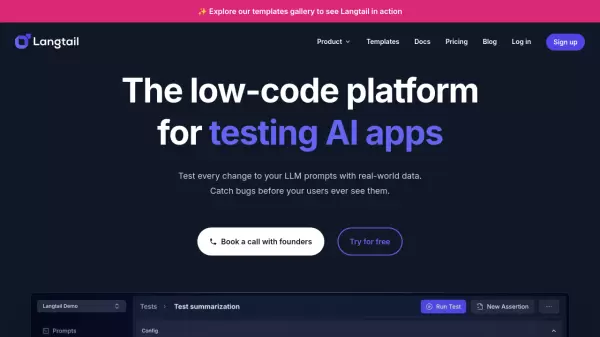

Langtail The low-code platform for testing AI apps

Langtail The low-code platform for testing AI appsLangtail is a comprehensive testing platform that enables teams to test and debug LLM-powered applications with a spreadsheet-like interface, offering security features and integration with major LLM providers.

- Freemium

- From $99

PromptMage: A Python framework for simplified LLM-based application development

PromptMage is a Python framework that streamlines the development of complex, multi-step applications powered by Large Language Models (LLMs), offering version control, testing capabilities, and automated API generation.

- Other

promptfoo: Test & secure your LLM apps with open-source LLM testing

promptfoo is an open-source LLM testing tool designed to help developers secure and evaluate their language model applications, offering features like vulnerability scanning and continuous monitoring.

- Freemium

BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

Adaline: Ship reliable AI faster

Adaline is a collaborative platform for teams building with Large Language Models (LLMs), enabling efficient iteration, evaluation, deployment, and monitoring of prompts.

- Contact for Pricing

Literal AI: Ship reliable LLM Products

Literal AI streamlines the development of LLM applications, offering tools for evaluation, prompt management, logging, monitoring, and more to build production-grade AI products.

- Freemium
