LLM deployment platforms - AI tools


Kalavai: Turn your devices into a scalable LLM platform

Kalavai offers a platform for deploying Large Language Models (LLMs) across various devices, scaling from personal laptops to full production environments. It simplifies LLM deployment and experimentation.

- Paid

- From $29


Float16.cloud: Your AI Infrastructure, Managed & Simplified

Float16.cloud provides managed GPU infrastructure and LLM solutions for AI workloads. Its services include serverless GPU computing and one-click LLM deployment, with a focus on optimizing cost and performance.

- Usage Based


Featherless: Instant, Unlimited Hosting for Any Llama Model on HuggingFace

Featherless provides instant, unlimited hosting for any Llama model on HuggingFace, eliminating the need for server management. It offers access to more than 3,700 compatible models, starting from $10/month.

- Paid

- From $10


LM Studio: Discover, download, and run local LLMs on your computer

LM Studio is a desktop application that lets users run Large Language Models (LLMs) locally and offline. It supports various model architectures, including Llama, Mistral, Phi, Gemma, DeepSeek, and Qwen 2.5.

- Free


AMOD: AI models on demand

AMOD provides a platform for quickly deploying Large Language Models such as Llama, Claude, Titan, and Mistral via API, making integration and scaling easy for businesses.

- Free Trial

- From $20


Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25


CentML: Better, Faster, Easier AI

CentML streamlines LLM deployment through advanced system optimization and efficient hardware utilization. It provides single-click resource sizing and model serving, and supports diverse hardware and models.

- Usage Based


LlamaEdge: The easiest, smallest and fastest local LLM runtime and API server

LlamaEdge is a lightweight, fast local LLM runtime and API server powered by Rust and WasmEdge, designed for building cross-platform LLM agents and web services.

- Free


Lora: Integrate a local LLM with one line of code

Lora provides an SDK for integrating a fine-tuned, mobile-optimized local Large Language Model (LLM) into applications with minimal setup, offering GPT-4o-mini-level performance.

- Freemium


Neural Magic: Deploy Open-Source LLMs to Production with Maximum Efficiency

Neural Magic offers enterprise inference server solutions that streamline AI model deployment, maximizing computational efficiency and reducing costs on both GPU and CPU infrastructure.

- Contact for Pricing

-

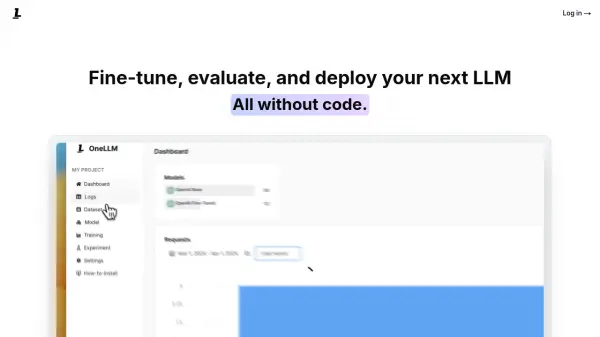

OneLLM: Fine-tune, evaluate, and deploy your next LLM without code

OneLLM is a no-code platform for fine-tuning, evaluating, and deploying Large Language Models (LLMs). It streamlines LLM development by letting users create datasets, integrate API keys, run fine-tuning jobs, and compare model performance.

- Freemium

- From $19


Ollama: Get up and running with large language models locally

Ollama is a platform for running large language models such as Llama 3.3, DeepSeek-R1, Phi-4, Mistral, and Gemma 2 on local machines.

- Free

-

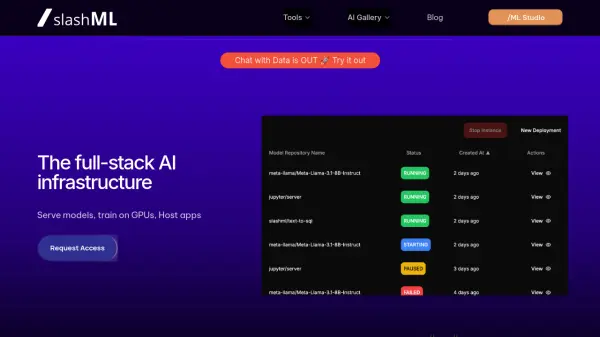

/ML: The full-stack AI infra

/ML offers full-stack AI infrastructure for serving large language models, training multimodal models on GPUs, and hosting AI applications built with Streamlit, Gradio, and Dash, while providing cost observability.

- Contact for Pricing
