LLM experimentation tools - AI tools


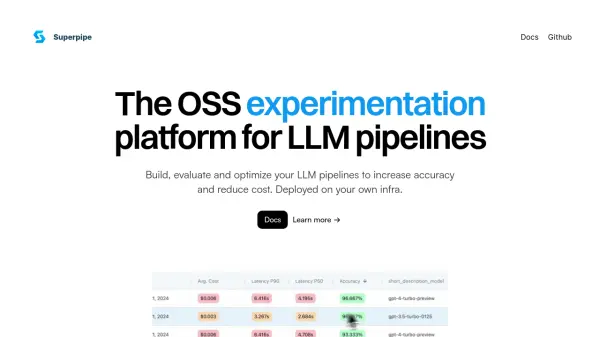

Superpipe: The OSS experimentation platform for LLM pipelines

Superpipe is an open-source experimentation platform for building, evaluating, and optimizing Large Language Model (LLM) pipelines to improve accuracy and minimize costs. It can be deployed on the user's own infrastructure for enhanced privacy and security.

- Free


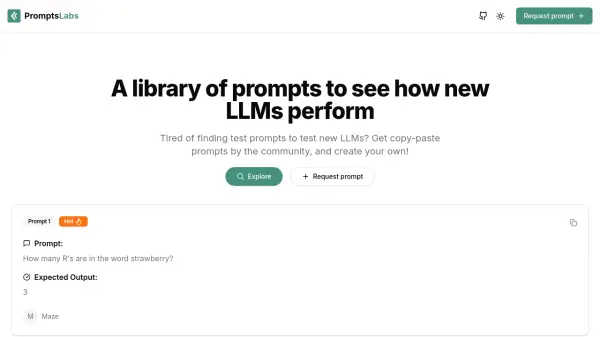

PromptsLabs: A Library of Prompts for Testing LLMs

PromptsLabs is a community-driven platform providing copy-paste prompts to test the performance of new LLMs. Explore and contribute to a growing collection of prompts.

- Free


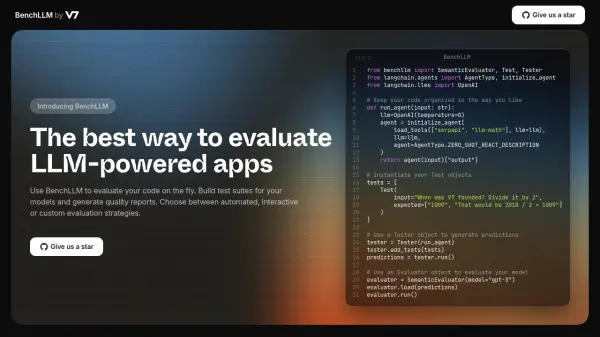

BenchLLM: The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other


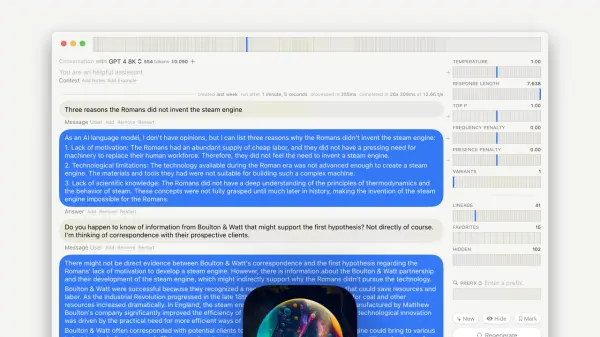

GPT-LLM Playground: Your Comprehensive Testing Environment for Large Language Models

GPT-LLM Playground is a macOS application designed for advanced experimentation and testing with Large Language Models (LLMs). It offers features like multi-model support, versioning, and custom endpoints.

- Free


Gentrace: Intuitive evals for intelligent applications

Gentrace is an LLM evaluation platform that lets AI teams test generative AI products and agents and automate their evaluations. It supports collaborative development aimed at high-quality LLM applications.

- Usage Based


Literal AI: Ship reliable LLM Products

Literal AI streamlines the development of LLM applications, offering tools for evaluation, prompt management, logging, monitoring, and more to build production-grade AI products.

- Freemium


Laminar: The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25


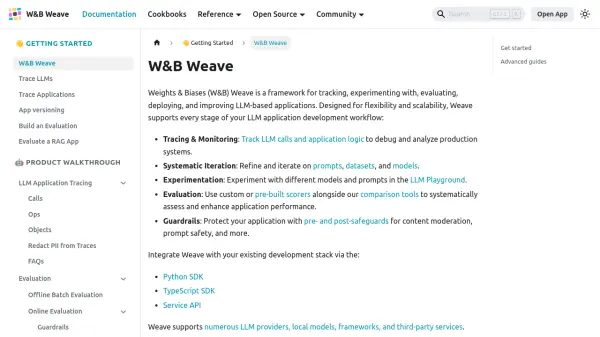

W&B Weave: A Framework for Developing and Deploying LLM-Based Applications

Weights & Biases (W&B) Weave is a framework for tracking, experimenting with, evaluating, deploying, and improving LLM-based applications.

- Other


Conviction: The Platform to Evaluate & Test LLMs

Conviction is an AI platform for evaluating, testing, and monitoring Large Language Models (LLMs) to help developers build reliable AI applications faster. It focuses on detecting hallucinations, optimizing prompts, and ensuring security.

- Freemium

- From $249
