What is Model Gateway?

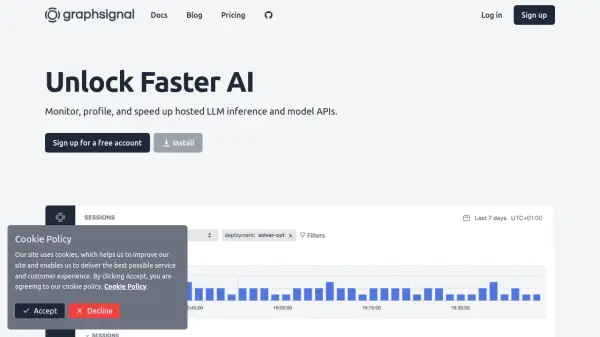

Model Gateway is an open-source intermediary that manages AI inference requests between client applications and AI service providers. Its goal is to make interactions with AI models such as OpenAI's GPT faster and more reliable. To do this, it actively monitors the performance of different providers and regions, including the OpenAI Platform and all Azure OpenAI data centers.

The platform routes each request to the provider and region that is fastest and most reliable at that moment, which can yield up to 15 times more output tokens per second than a static endpoint. Load balancing and failover across multiple endpoints provide high availability and redundancy. Model Gateway works with existing AI libraries and supports multiple providers, including Azure OpenAI, OpenAI, and Ollama.
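The routing idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Model Gateway's actual implementation: the `Endpoint` class, its fields, and the `rank_endpoints` and `route` functions are hypothetical names, and the throughput figures would in practice come from the gateway's own monitoring.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    """One provider/region pair with its latest measurements (hypothetical)."""
    name: str
    tokens_per_second: float  # recently measured output throughput
    healthy: bool = True      # result of the last health check


def rank_endpoints(endpoints):
    """Return the healthy endpoints, fastest first."""
    healthy = [e for e in endpoints if e.healthy]
    return sorted(healthy, key=lambda e: e.tokens_per_second, reverse=True)


def route(endpoints, send):
    """Try endpoints from fastest to slowest, failing over on errors.

    `send` is a caller-supplied function that takes an Endpoint and returns
    a response, raising an exception if the call fails.
    """
    last_error = None
    for endpoint in rank_endpoints(endpoints):
        try:
            return endpoint.name, send(endpoint)
        except Exception as exc:  # broad on purpose: any failure triggers failover
            last_error = exc
    raise RuntimeError("no endpoint could serve the request") from last_error
```

Calling `route(endpoints, send)` returns the name of the endpoint that answered together with its response, so a caller can see when a request was served by a fallback rather than by the fastest candidate.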

Features

- Fastest Possible Inference: Achieves up to 15x more output tokens per second via active routing.

- Load Balancing and Failover: Distributes load across multiple endpoints and regions for high availability and redundancy.

- Easy Integration: Compatible with existing AI client libraries for Python, Node.js, Java, and PHP, as well as plain curl requests.

- Multi-Provider Support: Connects seamlessly with Azure OpenAI, OpenAI, Ollama, and potentially more.

- Administrative Interface: User-friendly UI and GraphQL API for managing configurations and monitoring performance.

- Secure and Configurable: Securely handles API keys/tokens with advanced configuration options.

- Self-Hosted Option: Guarantees data privacy by allowing deployment on user infrastructure.

- Open-Source Core: Provides essential features for centralized and reliable AI inference for free.
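To make the load-balancing feature above concrete, here is a minimal sketch of distributing requests across endpoints. The source does not say which balancing strategy Model Gateway uses, so plain round-robin is assumed here as the simplest illustration, and `RoundRobinBalancer` is a hypothetical name.

```python
import itertools


class RoundRobinBalancer:
    """Hand out endpoints in rotation so no single one takes all the load."""

    def __init__(self, endpoints):
        endpoints = list(endpoints)
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        # itertools.cycle repeats the sequence indefinitely.
        self._cycle = itertools.cycle(endpoints)

    def next_endpoint(self):
        """Return the endpoint that should receive the next request."""
        return next(self._cycle)
```

A real gateway would likely weight this rotation by measured performance and skip unhealthy endpoints, but the rotation itself is the core of spreading load.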

Use Cases

- Accelerating AI application response times.

- Improving the reliability of AI model interactions.

- Managing API requests across multiple AI providers (OpenAI, Azure OpenAI, Ollama).

- Implementing failover strategies for critical AI integrations.

- Centralizing AI inference management for multiple client applications.

- Ensuring data privacy by self-hosting the AI request gateway.

FAQs

- Which AI service providers are supported by Model Gateway?

  Model Gateway connects seamlessly with Azure OpenAI, OpenAI, Ollama, and potentially more providers.

- What code updates are needed to integrate Model Gateway?

  Integration typically requires minimal code changes, primarily updating the API endpoint to the Model Gateway URL and managing the API key as demonstrated in the provided code examples for various languages.

- How does Model Gateway achieve faster AI responses?

  It monitors various AI providers (like OpenAI Platform and Azure OpenAI data centers) and intelligently routes your request to the fastest and most reliable provider and region available at that moment.
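The integration step from the FAQ, pointing the client at the gateway instead of at a provider, might look roughly like the sketch below. Everything specific in it is an assumption made for illustration: the gateway URL, the environment variable names, the OpenAI-style `/v1/chat/completions` path, and the placeholder model name. Check the Model Gateway documentation for the real values.

```python
import json
import os
import urllib.request

# Assumed values for illustration only; replace with your deployment's settings.
GATEWAY_URL = os.environ.get("MODEL_GATEWAY_URL", "https://gateway.example.com")
API_KEY = os.environ.get("MODEL_GATEWAY_API_KEY", "example-key")


def build_chat_request(prompt, base_url=GATEWAY_URL, api_key=API_KEY):
    """Build (but do not send) a chat request aimed at the gateway."""
    payload = {
        "model": "gpt-4o",  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Against a running gateway, the request could be sent with `urllib.request.urlopen(build_chat_request("Hello"))`. The point of the sketch is that only the base URL and the key differ from a direct provider call, while the request body stays the same.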
