LLM Token Counter - Alternatives & Competitors

Secure client-side token counting for popular language models

A token counting tool that helps users manage token limits across multiple language models, including GPT-4, Claude 3, and Llama 3, with client-side processing so that text never leaves the browser.

Ranked by Relevance

1

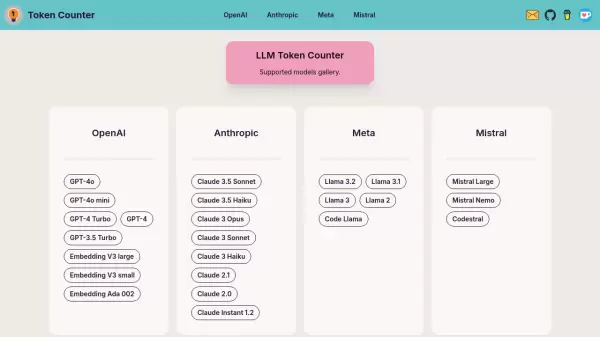

Token Counter Accurate token counting and cost estimation for popular LLM models

A web-based tool that provides real-time token counting and cost estimation for various Large Language Models (LLMs), including OpenAI's GPT models and Anthropic's Claude models.

- Free

2

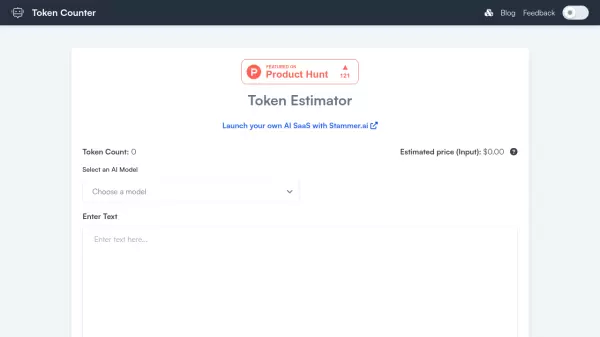

Prompt Token Counter Online Token Counter for OpenAI Models

Prompt Token Counter is an online tool for counting tokens in prompts for various OpenAI models like GPT-4o, GPT-4, and GPT-3.5 Turbo, ensuring efficient API usage.

- Free

3

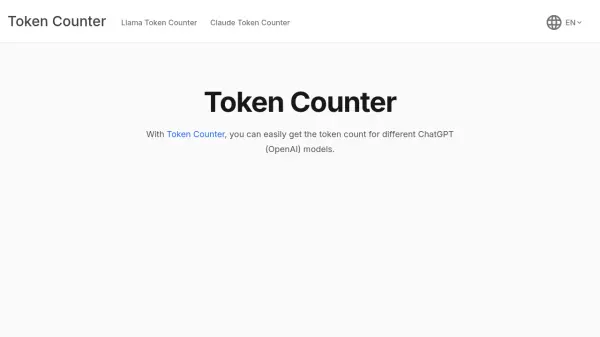

tokencounter.org Accurately Calculate Token Counts and Costs for OpenAI Models

Token Counter is a free online tool that converts text into tokens and estimates costs for various OpenAI models like GPT-4 and GPT-3.5, simplifying AI model usage and expense management.

- Free

4

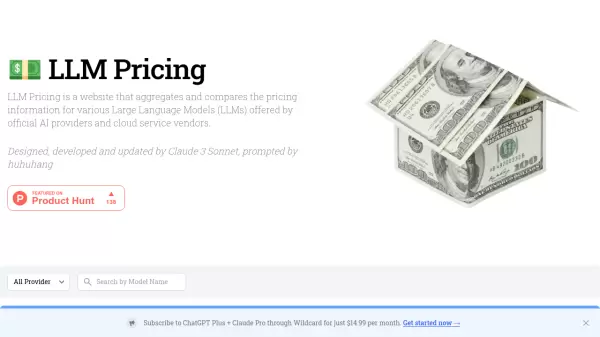

LLM Pricing A comprehensive pricing comparison tool for Large Language Models

LLM Pricing is a website that aggregates and compares pricing information for various Large Language Models (LLMs) from official AI providers and cloud service vendors.

- Free

5

WebLLM High-Performance In-Browser LLM Inference Engine

WebLLM enables running large language models (LLMs) directly within a web browser using WebGPU for hardware acceleration, reducing server costs and enhancing privacy.

- Free

6

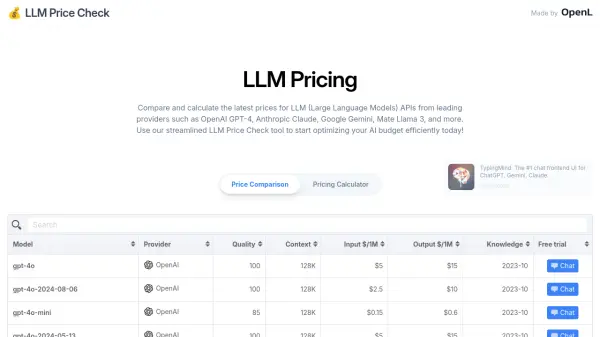

LLM Price Check Compare LLM Prices Instantly

LLM Price Check allows users to compare and calculate prices for Large Language Model (LLM) APIs from providers like OpenAI, Anthropic, Google, and more. Optimize your AI budget efficiently.

- Free

7

Promptech The AI teamspace to streamline your workflows

Promptech is a collaborative AI platform that provides prompt engineering tools and teamspace solutions for organizations to effectively utilize Large Language Models (LLMs). It offers access to multiple AI models, workspace management, and enterprise-ready features.

- Paid

- From $20

8

Compare AI Models AI Model Comparison Tool

Compare AI Models is a platform providing comprehensive comparisons and insights into various large language models, including GPT-4o, Claude, Llama, and Mistral.

- Freemium

9

BrowserAI Run Local LLMs Inside Your Browser

BrowserAI is an open-source library enabling developers to run local Large Language Models (LLMs) directly within a user's browser, offering a privacy-focused AI solution with zero infrastructure costs.

- Free

10

Tiktokenizer Unlock the full potential of AI apps without stressing over tokens

Tiktokenizer simplifies AI app development by automatically tracking OpenAI API token usage per user, enabling transparent billing. It integrates seamlessly with OpenAI's Chat and Moderations APIs.

- Contact for Pricing

11

Nemotron AI Next-generation language models delivering unparalleled understanding, reasoning, and generation capabilities.

Nemotron AI offers advanced large language models (LLMs) with exceptional understanding, reasoning, and generation capabilities, supporting multilingual tasks and extended context windows.

- Contact for Pricing

12

AiPrice Token and Pricing Calculation for OpenAI LLM Models

AiPrice calculates OpenAI API prompt token counts and provides accurate pricing estimations. It helps developers estimate costs before making API calls.

- Freemium

- From $2

13

promptfoo Test & secure your LLM apps with open-source LLM testing

promptfoo is an open-source LLM testing tool designed to help developers secure and evaluate their language model applications, offering features like vulnerability scanning and continuous monitoring.

- Freemium

14

llmChef Perfect AI responses with zero effort

llmChef is an AI enrichment engine that provides access to over 100 pre-made prompts (recipes) and leading LLMs, enabling users to get optimal AI responses without crafting perfect prompts.

- Paid

- From $5

15

Langtail The low-code platform for testing AI apps

Langtail is a comprehensive testing platform that enables teams to test and debug LLM-powered applications with a spreadsheet-like interface, offering security features and integration with major LLM providers.

- Freemium

- From $99

16

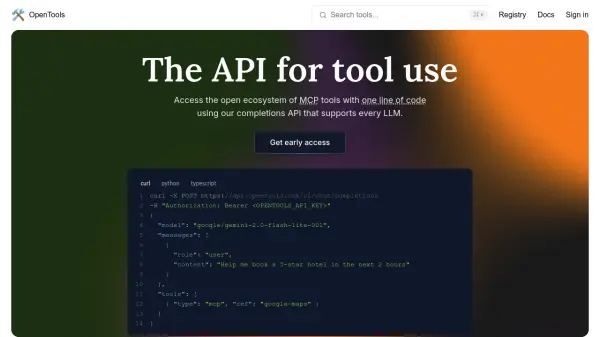

OpenTools The API for Enhanced LLM Tool Use

OpenTools provides a unified API enabling developers to connect Large Language Models (LLMs) with a diverse ecosystem of tools, simplifying integration and management.

- Usage Based

17

Laminar The AI engineering platform for LLM products

Laminar is an open-source platform that enables developers to trace, evaluate, label, and analyze Large Language Model (LLM) applications with minimal code integration.

- Freemium

- From $25

18

LangWatch Monitor, Evaluate & Optimize your LLM performance with 1-click

LangWatch empowers AI teams to ship 10x faster with quality assurance at every step. It provides tools to measure, maximize, and easily collaborate on LLM performance.

- Paid

- From $59

19

Langfuse Open Source LLM Engineering Platform

Langfuse provides an open-source platform for tracing, evaluating, and managing prompts to debug and improve LLM applications.

- Freemium

- From $59

20

BenchLLM The best way to evaluate LLM-powered apps

BenchLLM is a tool for evaluating LLM-powered applications. It allows users to build test suites, generate quality reports, and choose between automated, interactive, or custom evaluation strategies.

- Other

21

Lintrule Let the LLM review your code

Lintrule is a command-line tool that uses large language models to perform automated code reviews, enforce coding policies, and detect bugs beyond traditional linting capabilities.

- Usage Based

22

klu.ai Next-gen LLM App Platform for Confident AI Development

Klu is an all-in-one LLM app platform that enables teams to experiment with, version, and fine-tune GPT-4 apps using collaborative prompt engineering and comprehensive evaluation tools.

- Freemium

- From $30

23

PromptMage A Python framework for simplified LLM-based application development

PromptMage is a Python framework that streamlines the development of complex, multi-step applications powered by Large Language Models (LLMs), offering version control, testing capabilities, and automated API generation.

- Other
