What is Kolosal AI?

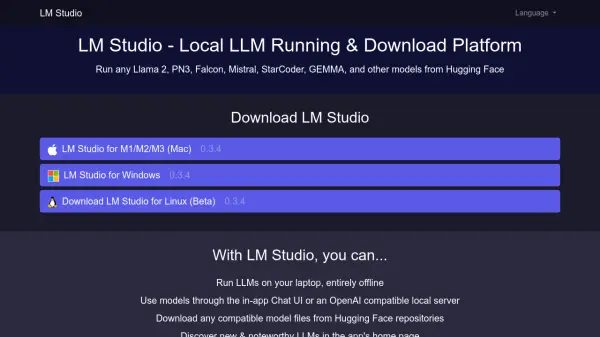

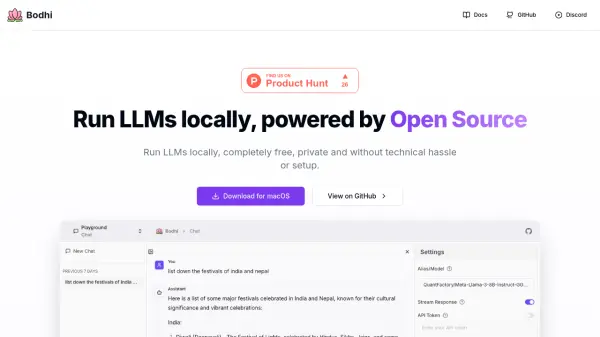

Kolosal AI provides a platform for users to harness the capabilities of large language models (LLMs) locally on their own devices. It is designed as a lightweight, open-source application that prioritizes speed, user privacy, and customization options. This approach eliminates the need for cloud dependencies and subscriptions, offering users complete control over their AI interactions.

The platform features an intuitive chat interface optimized for speed and efficiency when interacting with local LLMs. Users can download Kolosal AI to manage models and hold AI-powered conversations without relying on external servers. It positions itself as a tool for individuals and businesses seeking private and customizable AI solutions.

Features

- Local LLM Execution: Train, run, and chat with LLMs directly on your device.

- Privacy Focused: Ensures complete privacy and control as data remains local.

- Open Source: Built on open-source principles, allowing for transparency and community contribution under the Apache 2.0 License.

- Lightweight Application: Designed to be resource-efficient.

- Intuitive Chat Interface: Provides a user-friendly interface for interacting with local models.

- Offline Capability: Functions without cloud dependencies, enabling offline use.

Use Cases

- Engaging in private AI conversations without cloud data transmission.

- Training and fine-tuning LLMs using local datasets.

- Developing and testing AI applications offline.

- Analyzing sensitive data with LLMs securely on-device.

- Creating custom AI tools and workflows with enhanced control.

FAQs

- What is a local LLM?

  A local large language model (LLM) is an AI model that runs entirely on your own device, without needing to connect to external cloud servers.

- What are the advantages of using a local LLM over cloud-based models?

  Advantages include enhanced privacy because data stays on your device, no subscription costs, offline usability, and greater control over the model and its usage.

- Does the Kolosal AI local LLM platform work offline?

  Yes. Kolosal AI is designed to work offline because it runs LLMs locally on your device without cloud dependencies.

- What hardware do I need to run a local LLM with Kolosal AI?

  Specific hardware requirements are not listed here. Running LLMs locally generally requires capable hardware (CPU, GPU, RAM), and the demands grow with model size.

- Does Kolosal AI support Mac and Linux operating systems?

  The download is currently offered for Windows. Support for Mac and Linux is not explicitly stated.
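The hardware question in the FAQs can be made a little more concrete with back-of-the-envelope arithmetic. The sketch below is not part of Kolosal AI and does not reflect its actual requirements. It only applies the general rule that a model's weights occupy roughly (number of parameters × bits per weight ÷ 8) bytes. The function name, the example model sizes, and the bit widths are all assumptions chosen for illustration, and real memory use is higher once context caches and runtime overhead are included.

```python
def estimate_weight_memory_gib(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed for model weights alone, in GiB.

    Hypothetical helper for illustration: ignores the KV cache,
    activations, and any runtime overhead.
    """
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / (1024 ** 3)


if __name__ == "__main__":
    # Example sizes and precisions are illustrative assumptions,
    # not a list of models supported by any particular application.
    for label, params in [("7B", 7e9), ("13B", 13e9)]:
        for bits in (16, 8, 4):
            gib = estimate_weight_memory_gib(params, bits)
            print(f"{label} model at {bits}-bit: about {gib:.1f} GiB for weights")
```

Comparing the result against available RAM or GPU memory gives a rough first check on whether a given model size is practical on a device.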
