What is Inference.net?

Inference.net offers developers fast, scalable APIs for a range of large language models, including DeepSeek V3, Llama 3.1, and Mistral Nemo. It emphasizes cost efficiency through pay-per-token pricing, which it states can be up to 90% lower than other providers' rates for the same models. The platform targets enterprise-grade reliability and performance, running on optimized GPU infrastructure.

Designed for easy integration, Inference.net features OpenAI-compatible APIs, allowing developers to switch services quickly with minimal code changes. It supports diverse applications such as real-time chat interactions, large-scale batch inference processing for tasks like data analysis and synthetic data generation, and precise data extraction with schema validation. The service is built to scale from zero to billions of requests, catering to both small projects and large enterprise workloads.
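As a rough illustration of what "minimal code changes" can mean for an OpenAI-compatible API, the sketch below builds the same chat completion request for two providers, changing only the base URL and API key. The Inference.net base URL, the environment variable name, and the model ID are assumptions made for illustration; check the provider's documentation for the real values. The request body follows the standard OpenAI chat completions shape.

```python
import json
import os
import urllib.request

OPENAI_BASE_URL = "https://api.openai.com/v1"
# Assumption: placeholder base URL; confirm the real one in the provider docs.
INFERENCE_BASE_URL = "https://api.inference.net/v1"


def build_chat_request(base_url, api_key, model, user_message):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Switching providers only changes the first two arguments.
# "INFERENCE_API_KEY" and "example-model" are hypothetical names.
request = build_chat_request(
    INFERENCE_BASE_URL,
    os.environ.get("INFERENCE_API_KEY", "missing-key"),
    "example-model",
    "Say hello in one sentence.",
)
```

Calling `send(request)` would perform the network call. Applications that already use the OpenAI SDK would typically make the equivalent change by passing a different base URL and API key when constructing the client.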

Features

- API Access to Top Models: Provides APIs for frontier models such as DeepSeek V3, Llama 3.1, and Mistral Nemo.

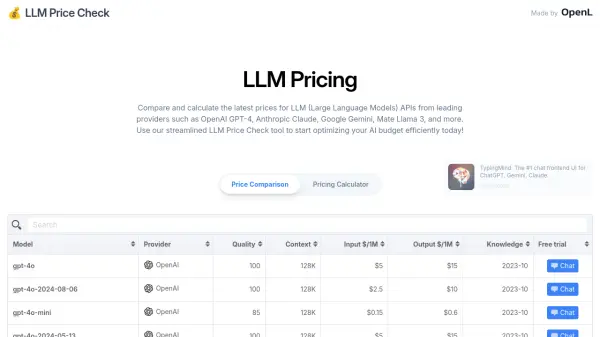

- Pay-Per-Token Pricing: Offers usage-based billing, with costs stated to be up to 90% lower than competitors' for the same models.

- Scalable Infrastructure: Handles workloads from zero to billions of requests with low latency and high throughput.

- OpenAI SDK Compatibility: Allows easy integration and switching with minimal code changes.

- Batch Inference API: Enables processing millions of requests asynchronously with a single API call.

- Data Extraction with Schema Validation: Supports precise extraction of structured data using JSON Schema.

- Real-Time Chat APIs: Delivers powerful serverless inference APIs for chat applications.

- Developer Tooling Support: Integrates with LangChain, LlamaIndex, Pydantic, Zod, and offers SDKs (TypeScript, Python).
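To make the schema-validated extraction feature more concrete, here is a minimal sketch. It assumes the service accepts the OpenAI-style `response_format` field with a `json_schema` entry, which this listing does not confirm. The invoice schema, the model ID, and both helper functions are hypothetical. The local check covers only flat objects with primitive types; it is not a full JSON Schema validator, and a dedicated validation library would be the usual choice in practice.

```python
import json

# Hypothetical schema for illustration: three flat fields, all required.
INVOICE_SCHEMA = {
    "type": "object",
    "properties": {
        "vendor": {"type": "string"},
        "total": {"type": "number"},
        "currency": {"type": "string"},
    },
    "required": ["vendor", "total", "currency"],
    "additionalProperties": False,
}

_PY_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def build_extraction_payload(model, text, schema):
    """Build a chat request body asking for output that matches `schema`.

    Assumption: the OpenAI-style `response_format` shape is accepted.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "Extract the requested fields as JSON."},
            {"role": "user", "content": text},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "invoice", "schema": schema, "strict": True},
        },
    }


def check_flat_object(raw_json, schema):
    """Return a list of problems found in a flat JSON object (empty if none)."""
    data = json.loads(raw_json)
    if not isinstance(data, dict):
        return ["top-level value is not an object"]
    problems = []
    for key in schema.get("required", []):
        if key not in data:
            problems.append(f"missing required field: {key}")
    for key, value in data.items():
        spec = schema["properties"].get(key)
        if spec is None:
            if schema.get("additionalProperties") is False:
                problems.append(f"unexpected field: {key}")
            continue
        expected = _PY_TYPES[spec["type"]]
        # bool is a subclass of int in Python, so reject it for numeric fields.
        is_stray_bool = isinstance(value, bool) and spec["type"] != "boolean"
        if is_stray_bool or not isinstance(value, expected):
            problems.append(f"wrong type for {key}")
    return problems
```

Checking the model's reply on the client side, even when the server enforces the schema, gives a cheap safeguard before the extracted data enters downstream systems.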

Use Cases

- Developing AI-powered real-time chat applications.

- Performing large-scale batch processing for data analysis.

- Generating synthetic data at scale.

- Processing large volumes of documents asynchronously.

- Extracting structured data from unstructured text with schema validation.

- Building applications requiring access to various LLMs via a unified API.

- Reducing inference costs for existing AI applications.
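For the batch-oriented use cases above, asynchronous processing usually starts with a file containing one request per line. The sketch below assumes the batch input follows the OpenAI Batch API's JSONL convention (`custom_id`, `method`, `url`, `body`); whether Inference.net uses exactly this format is not stated in this listing. The function name and model ID are hypothetical.

```python
import json


def build_batch_jsonl(model, documents, instruction):
    """Return JSONL text with one chat completion request per document.

    Assumption: the line format mirrors the OpenAI Batch API input file.
    """
    lines = []
    for index, document in enumerate(documents):
        request = {
            # custom_id lets each result be matched back to its input later.
            "custom_id": f"doc-{index}",
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "messages": [
                    {"role": "system", "content": instruction},
                    {"role": "user", "content": document},
                ],
            },
        }
        lines.append(json.dumps(request))
    return "".join(line + "\n" for line in lines)


batch_text = build_batch_jsonl(
    "example-model",
    ["First document text.", "Second document text."],
    "Summarize the document in one sentence.",
)
```

The resulting text would be uploaded as the batch input, and results retrieved later by `custom_id`, so the client never has to hold millions of open connections.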

FAQs

- How does Inference.net pricing compare to other providers?

  Inference.net states its pay-per-token pricing is up to 90% lower than other providers for the same models.

- Is Inference.net compatible with existing OpenAI integrations?

  Yes, the APIs are OpenAI-compatible, requiring only a minimal code change (e.g., changing the base URL and API key) for integration.

- What kind of models does Inference.net offer?

  Inference.net provides access to various large language models, including versions of DeepSeek, Meta Llama, Mistral, Google Gemma, and Qwen.

- Can I process large amounts of data using Inference.net?

  Yes, Inference.net offers a Batch Inference API designed for processing millions of requests asynchronously, suitable for large-scale data analysis, synthetic data generation, and document processing.

- Does Inference.net offer free trials or credits?

  Inference.net provides $25 in free credits for new users to explore models via their Playground.

Inference.net Uptime Monitor

- Average Uptime: 99.86%

- Average Response Time: 406.67 ms
